This guide describes the Maya features for accelerating playback and manipulation of animated scenes. It covers key concepts, shares best practices/usage tips, and lists known limitations that we aim to address in subsequent versions of Maya.

This guide will be of interest to riggers, TDs, and plug-in authors wishing to take advantage of speed enhancements in Maya.

If you would like an overview of related topics prior to reading this document, check out Supercharged Animation Performance in Maya 2016.

Starting from Maya 2016, Maya accelerates existing scenes by taking better advantage of your hardware. Unlike previous versions of Maya, which were limited to node-level parallelism, Maya now includes a mechanism for scene-level analysis and parallelization. For example, if your scene contains different characters that are unconstrained to one another, Maya can evaluate each character at the same time.

Similarly, if your scene has a single complex character, it may be possible to evaluate rig sub-sections simultaneously. As you can imagine, the amount of parallelism depends on how your scene has been constructed. We will get back to this later. For now, let’s focus on understanding key Maya evaluation concepts.

At the heart of Maya’s new evaluation architecture is an Evaluation Manager (EM), responsible for handling the parallel-friendly representation of your scene. It maintains (and updates while the scene is edited) a few data structures (described below) used for efficient evaluation.

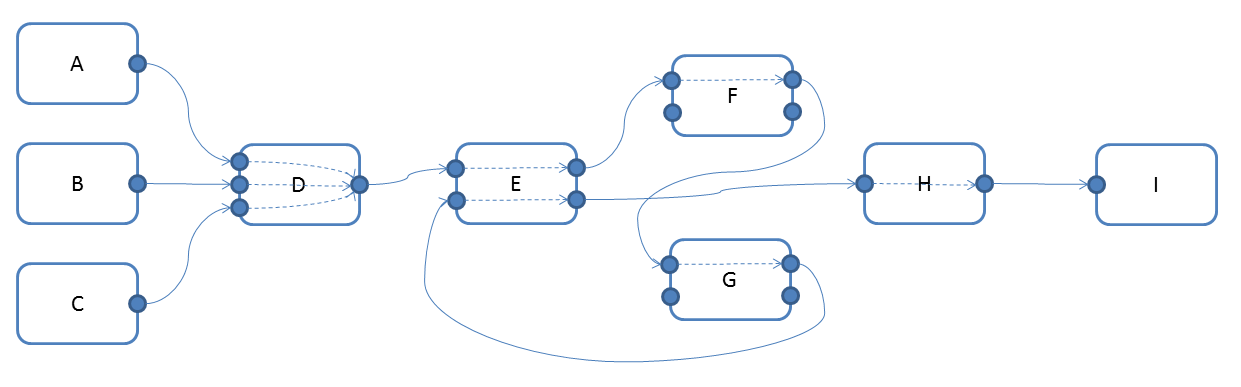

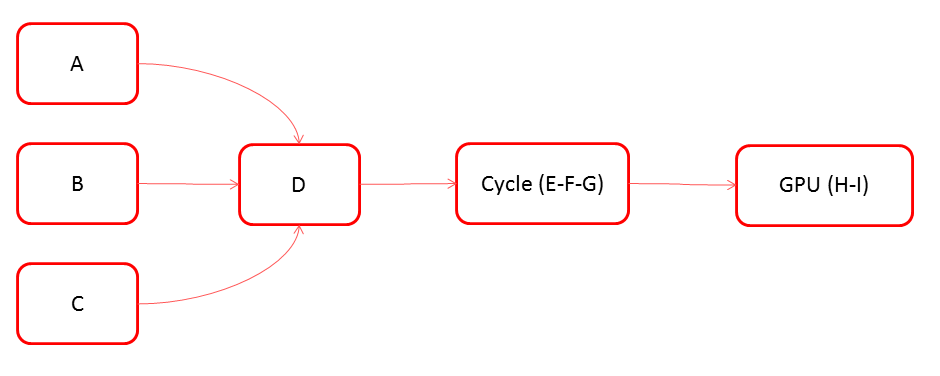

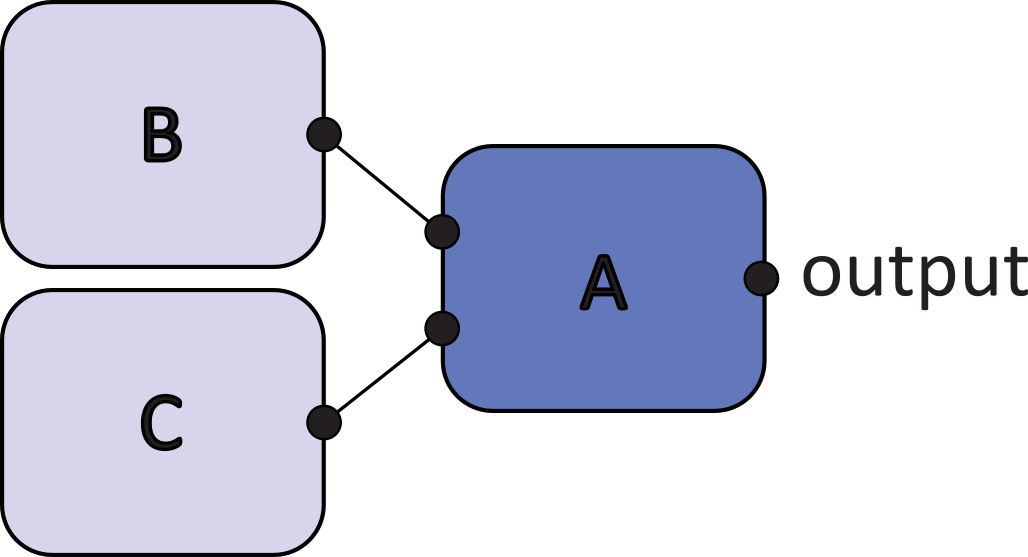

The basic description of the scene is the Dependency Graph (DG), consisting of DG nodes and connections. Nodes can have multiple attributes, and instances of these attributes on a specific node are called plugs. The DG connections are at the plug level, that is, two nodes can be connected to one another multiple ways through different plugs. Generally speaking, these connections represent data flow through the nodes as they evaluate. The following image shows an example DG:

The dotted arrows inside the nodes represent an implicit computation dependency between an output attribute (on the right of the node) and the input attributes (on the left) being read to compute the result stored in the output.

Before Parallel Maya, the DG was used to evaluate the scene using a Pull Model or Pull Evaluation. In this model, the data consumer (for instance the renderer) queries data from a given node. If the data is already evaluated, the consumer receives it directly. However, if the data is dirty, the node must first recompute it. It does so by pulling on the inputs required to compute the requested data. These inputs can also be dirty, in which case the evaluation request will then be forwarded to those dirty sources until it reaches the point where the data can be evaluated. The result then propagates back up in the graph, as the data is being “pulled”.

This evaluation model relies on the ability to mark node data as invalid and therefore requiring new evaluation. This mechanism is known as the Dirty Propagation in which the invalid data status propagates to all downstream dependencies. The two main cases where dirty propagation happened in the Pull Evaluation model were when:

The Pull Evaluation model is not well suited for efficient parallel evaluation because of potential races that can arise from concurrent pull evaluations.
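
The pull model and dirty propagation described above can be illustrated with a small standalone sketch. This is a toy model written for this guide, not Maya code: the `Node` class, its `pull` and `mark_dirty` methods, and the three example nodes are all hypothetical names chosen for illustration.

```python
class Node:
    """Toy node with a single cached output computed from upstream nodes."""

    def __init__(self, name, compute, inputs=()):
        self.name = name
        self.compute = compute        # function of the upstream values
        self.inputs = list(inputs)    # upstream nodes
        self.outputs = []             # downstream nodes
        self.dirty = True
        self.value = None
        self.eval_count = 0
        for node in self.inputs:
            node.outputs.append(self)

    def mark_dirty(self):
        """Dirty propagation: invalidate this node and everything downstream."""
        self.dirty = True
        for node in self.outputs:
            node.mark_dirty()

    def pull(self):
        """Pull evaluation: recompute only if dirty, pulling on inputs first."""
        if self.dirty:
            args = [node.pull() for node in self.inputs]
            self.value = self.compute(*args)
            self.dirty = False
            self.eval_count += 1
        return self.value

    def set_value(self, value):
        """Simulate a change on a source node, then propagate dirty."""
        self.compute = lambda: value
        self.mark_dirty()


time_node = Node("time", lambda: 1.0)
curve = Node("curve", lambda t: 2.0 * t, [time_node])
xform = Node("xform", lambda c: c + 10.0, [curve])

first = xform.pull()     # everything is dirty, so the whole chain evaluates
cached = xform.pull()    # clean data is returned directly
time_node.set_value(2.0)
second = xform.pull()    # the change dirtied the chain, so it re-evaluates
```

Note that the evaluation order here is decided by whoever pulls first, which is why concurrent pulls on shared upstream nodes can race.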

To have tighter control over evaluation, Maya now uses a Forward Evaluation model to enable concurrent evaluation of multiple nodes. The general idea is simple: if all a node’s dependencies have been evaluated before we evaluate the given node, pull evaluation will not be triggered when accessing evaluated node data, so evaluation remains contained in the node and is easier to run concurrently.
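
As a rough illustration of the forward model, the sketch below evaluates a node only once all of its dependencies have been evaluated. It is a simplified standalone model, not the EM's implementation; `forward_evaluate` and the node names are hypothetical, and the graph is assumed to be acyclic.

```python
from collections import deque


def forward_evaluate(dependencies, compute):
    """Evaluate each node only after all of its dependencies are done.

    dependencies: dict mapping node name -> list of upstream node names.
    compute: dict mapping node name -> function of the upstream values.
    """
    remaining = {node: len(ups) for node, ups in dependencies.items()}
    downstream = {node: [] for node in dependencies}
    for node, ups in dependencies.items():
        for up in ups:
            downstream[up].append(node)

    # Nodes with no dependencies can be evaluated immediately.
    ready = deque(node for node, count in remaining.items() if count == 0)
    values, order = {}, []
    while ready:
        node = ready.popleft()
        # All inputs are already evaluated, so no pull is ever triggered.
        args = [values[up] for up in dependencies[node]]
        values[node] = compute[node](*args)
        order.append(node)
        for down in downstream[node]:
            remaining[down] -= 1
            if remaining[down] == 0:
                ready.append(down)
    return values, order


deps = {
    "time": [],
    "curveA": ["time"],
    "curveB": ["time"],
    "xform": ["curveA", "curveB"],
}
funcs = {
    "time": lambda: 2.0,
    "curveA": lambda t: t * 2.0,
    "curveB": lambda t: t + 1.0,
    "xform": lambda a, b: a + b,
}
values, order = forward_evaluate(deps, funcs)
```

In this example `curveA` and `curveB` become ready at the same moment, which is the kind of opportunity a parallel scheduler can exploit.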

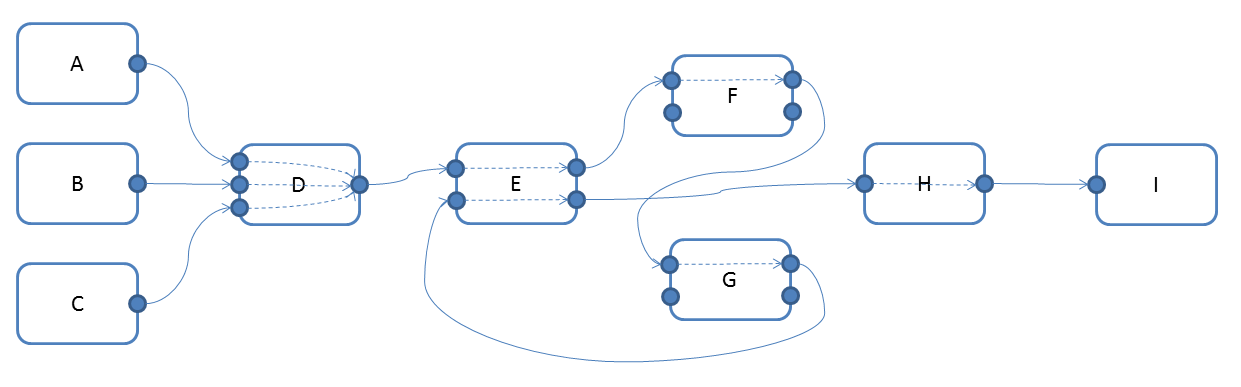

All data dependencies between the nodes must be known to apply this evaluation model, and this information is captured in the Evaluation Graph (EG), containing Evaluation Nodes. The EM uses dirty propagation to capture dependency information between the nodes, as well as which attributes are animated. EG connections represent node-level dependencies; destination nodes employ data from source nodes to correctly evaluate the scene. One important distinction between the DG and the EG is that the former uses plug-level connections, while the latter uses node-level connections. For example, the previous DG would create the following EG:

A valid EG may not exist, or may become invalid, for various reasons. For example, you have loaded a new scene and no EG has been built yet, or you have changed your scene, invalidating a prior EG. However, once the EG is built, unlike previous versions of Maya that propagated dirty on every frame, Maya now disables dirty propagation, reusing the EG until it becomes invalid.
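
The difference between plug-level DG connections and node-level EG connections can be sketched as follows. This only illustrates the change in granularity: as noted above, the EM actually captures dependencies through dirty propagation, not by collapsing connection lists. The function name and the example plugs are hypothetical.

```python
def build_evaluation_edges(plug_connections):
    """Collapse plug-level connections ("node.attr" pairs) into node-level edges."""
    edges = set()
    for source_plug, destination_plug in plug_connections:
        source_node = source_plug.split(".", 1)[0]
        destination_node = destination_plug.split(".", 1)[0]
        if source_node != destination_node:
            edges.add((source_node, destination_node))
    return sorted(edges)


# Two nodes connected through two different plugs yield a single EG edge.
connections = [
    ("ctrl.translateX", "arm.inputX"),
    ("ctrl.translateY", "arm.inputY"),
    ("arm.output", "hand.input"),
]
eg_edges = build_evaluation_edges(connections)
```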

Tip. If your scene contains expression nodes that use getAttr, the DG will be missing explicit dependencies. This results in an incorrect EG. Expression nodes also reduce the amount of parallelism in your scenes (see Scheduling Types for details). Consider removing getAttr from expressions and/or using utility nodes.

While the EG holds the dependency information, it is not ready to be evaluated concurrently as-is. The EM must first create units of work that can be scheduled, that is, tasks. The main types of task created are:

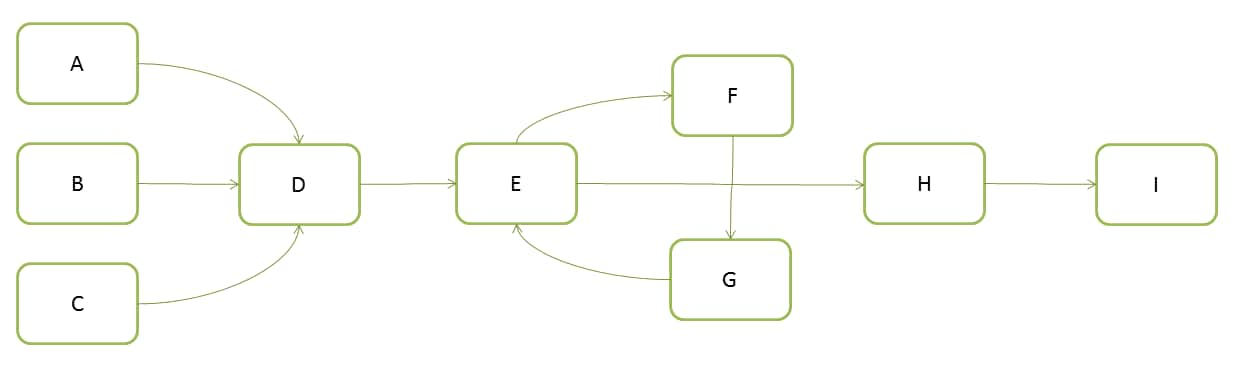

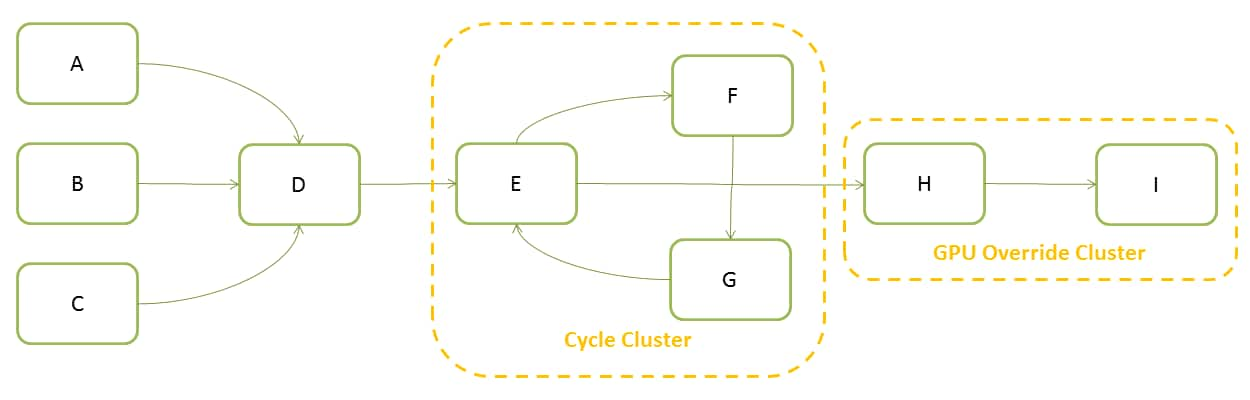

This step, called partitioning, is where the EM creates the individual pieces of work that will have to be executed. Each of these tasks will map to a Scheduling Node in the Scheduling Graph (SG), where connections represent dependencies between the tasks:

The SG is an acyclic graph, otherwise it would be impossible to schedule nodes in a cycle since there would be no starting point for which all dependencies could be evaluated. In addition to the dependencies that come directly from the EG, the SG can have additional scheduling constraints to prevent concurrent evaluation of subsets of nodes (see Scheduling Types for details).
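
To see why the SG must be acyclic, consider the minimal sketch below. It groups tasks into "waves" whose members have no unsatisfied dependencies and could therefore run concurrently, and it fails when a cycle leaves no valid starting point. This is a simplified model for illustration, not Maya's scheduler; `schedule_in_waves` and the task names are hypothetical.

```python
def schedule_in_waves(task_dependencies):
    """Group tasks into waves; tasks in one wave do not depend on each other.

    task_dependencies: dict mapping task name -> list of prerequisite tasks.
    Raises ValueError when no remaining task has all dependencies satisfied,
    which is what happens when the graph contains a cycle.
    """
    pending = {task: set(deps) for task, deps in task_dependencies.items()}
    waves = []
    while pending:
        ready = sorted(task for task, deps in pending.items() if not deps)
        if not ready:
            raise ValueError("cycle detected: no task can be started")
        waves.append(ready)
        for task in ready:
            del pending[task]
        for deps in pending.values():
            deps.difference_update(ready)
    return waves


waves = schedule_in_waves({
    "root": [],
    "armL": ["root"],
    "armR": ["root"],
    "hands": ["armL", "armR"],
})

# A two-task cycle has no starting point, so scheduling is rejected.
try:
    schedule_in_waves({"a": ["b"], "b": ["a"]})
    cycle_rejected = False
except ValueError:
    cycle_rejected = True
```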

Starting in Maya 2016, 3 evaluation modes are supported:

| Mode | What does it do? |
|---|---|
| DG | Uses the legacy Dependency Graph-based evaluation of your scene. This was the default evaluation mode prior to Maya 2016. |
| Serial | Evaluation Manager Serial mode. Uses the EG but limits scheduling to a single core. Serial mode is a troubleshooting mode to pinpoint the source of evaluation errors. |
| Parallel | Evaluation Manager Parallel mode. Uses the EG and schedules evaluation across all available cores. This mode is the new Maya default since 2016. |

When using either Serial or Parallel EM modes, you can also activate GPU Override to accelerate deformations on your GPU. You must be in Viewport 2.0 to use this feature (see GPU Override).

To switch between different modes, go to the Preferences window (Windows > Settings/Preferences > Preferences > Animation). You can also use the evaluationManager MEL/Python command; see documentation for supported options.
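
As a minimal sketch of scripted mode switching, the helper below wraps the evaluationManager command. It assumes the `mode` flag accepts "off" (DG), "serial", and "parallel", and that querying the flag returns a list containing the current mode; check the command documentation for your Maya version. The helper name is hypothetical, and `cmds` is passed in as a parameter so the function can be exercised outside Maya with a stand-in object.

```python
def set_evaluation_mode(cmds, mode):
    """Switch the evaluation mode and return what the command reports afterwards.

    cmds is expected to be the maya.cmds module.
    Assumed mode values: "off" (DG), "serial", "parallel".
    """
    valid_modes = ("off", "serial", "parallel")
    if mode not in valid_modes:
        raise ValueError("mode must be one of: " + ", ".join(valid_modes))
    cmds.evaluationManager(mode=mode)
    return cmds.evaluationManager(query=True, mode=True)


# Inside Maya, usage would look like this:
#   import maya.cmds as cmds
#   set_evaluation_mode(cmds, "serial")
```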

To see the evaluation options that apply to your scene, turn on the Heads Up Display Evaluation options (Display > Heads Up Display > Evaluation).

Before discussing how to make your Maya scene faster using Parallel evaluation, it is important to ensure that evaluation in DG and EM modes generates the same results. If you see different results in the viewport during animation (as compared to previous versions of Maya), or tests reveal numerical errors, it is critical to understand the cause of these errors. Errors may be due to an incorrect EG, threading related problems, or other issues.

Below, we review Evaluation Graph Correctness and Thread Safety, two important concepts to understand errors.

If you see evaluation errors, first test your scene in Serial evaluation mode (see Supported Evaluation Modes). Serial evaluation mode uses the EM to build an EG of your scene, but limits evaluation to a single core to eliminate threading as the possible source of differences. Note that since Serial evaluation mode is provided for debugging, it has not been optimized for speed and scenes may run slower in Serial than in DG evaluation mode. This is expected.

If transitioning to Serial evaluation eliminates errors, this suggests that differences are most likely due to threading-related issues. However, if errors persist (even after transitioning to Serial evaluation) this suggests that the EG is incorrect for your scene. There are a few possible reasons for this:

Custom Plugins. If your scene uses custom plug-ins that rely on the mechanism provided by the MPxNode::setDependentsDirty function to manage attribute dirtying, this may be the source of problems. Plug-in authors sometimes use MPxNode::setDependentsDirty to avoid expensive calculations in MPxNode::compute by monitoring and/or altering dependencies and storing computed results for later re-use.

Since the EM relies on dirty propagation to create the EG, any custom plug-in logic that alters dependencies may interfere with the construction of a correct EG. Furthermore, since the EM evaluation does not propagate dirty messages, any custom caching or computation in MPxNode::setDependentsDirty is not called while the EM is evaluating.

If you suspect that your evaluation errors are related to custom plug-ins, temporarily remove the associated nodes from your scene and validate that both DG and Serial evaluation modes generate the same result. Once you have made sure this is the case, revisit the plug-in logic. The API Extensions section covers Maya SDK changes that will help you adapt plug-ins to Parallel evaluation.

Another debugging option is to use “scheduling type” overrides to force custom nodes to be scheduled more conservatively. This approach enables the use of Parallel evaluation even if only some of the nodes are thread-safe. Scheduling types are described in more detail in the Thread Safety section.

Errors in Autodesk Nodes. Although we have done our best to ensure that all out-of-the-box Autodesk Maya nodes correctly express dependencies, sometimes a scene uses nodes in an unexpected manner. If this is the case, we ask that you make us aware of scenes where you encounter problems. We will do our best to address problems as quickly as possible.
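
The Serial-mode triage described in this section can be summarized in a short sketch. It assumes you have already sampled the same numeric values (for example, an attribute per frame) in DG, Serial, and Parallel modes; how the samples are captured is left out, and the function name and tolerance are hypothetical.

```python
def diagnose(dg_values, serial_values, parallel_values, tolerance=1e-6):
    """Classify evaluation differences using the Serial-mode test.

    Each argument is a list of numbers sampled in the corresponding mode.
    """
    def matches(a, b):
        return len(a) == len(b) and all(
            abs(x - y) <= tolerance for x, y in zip(a, b)
        )

    if not matches(dg_values, serial_values):
        # Errors persist without threading: suspect an incorrect EG.
        return "evaluation graph"
    if not matches(dg_values, parallel_values):
        # Serial is clean but Parallel differs: suspect thread safety.
        return "threading"
    return "consistent"


reference = [0.0, 1.0, 2.0]
eg_problem = diagnose(reference, [0.0, 1.5, 2.0], [0.0, 1.5, 2.0])
thread_problem = diagnose(reference, [0.0, 1.0, 2.0], [0.0, 1.0, 2.3])
no_problem = diagnose(reference, [0.0, 1.0, 2.0], [0.0, 1.0, 2.0])
```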

Prior to Maya 2016, evaluation was single-threaded and developers did not need to worry about making their code thread-safe. At each frame, evaluation was guaranteed to proceed serially and computation would finish for one node prior to moving onto another. This approach allowed for the caching of intermediate results in global memory and using external libraries without considering their ability to work correctly when called simultaneously from multiple threads.

These guarantees no longer apply. Developers working in recent versions of Maya must update plug-ins to ensure correct behavior during concurrent evaluation.

Two things to consider when updating plug-ins:

Different instances of a node type should not share resources. Unmanaged shared resources can lead to evaluation errors since different nodes, of the same type, can have their compute() methods called at the same time.

Avoid non-thread-safe lazy evaluation. In the EM, evaluation is scheduled from predecessors to successors on a per-node basis. Once computation has been performed for predecessors, results are cached and made available to successors via connections. Any attempt to perform non-thread-safe lazy evaluation could return different answers to different successors or, depending on the nature of the bug, cause instabilities.

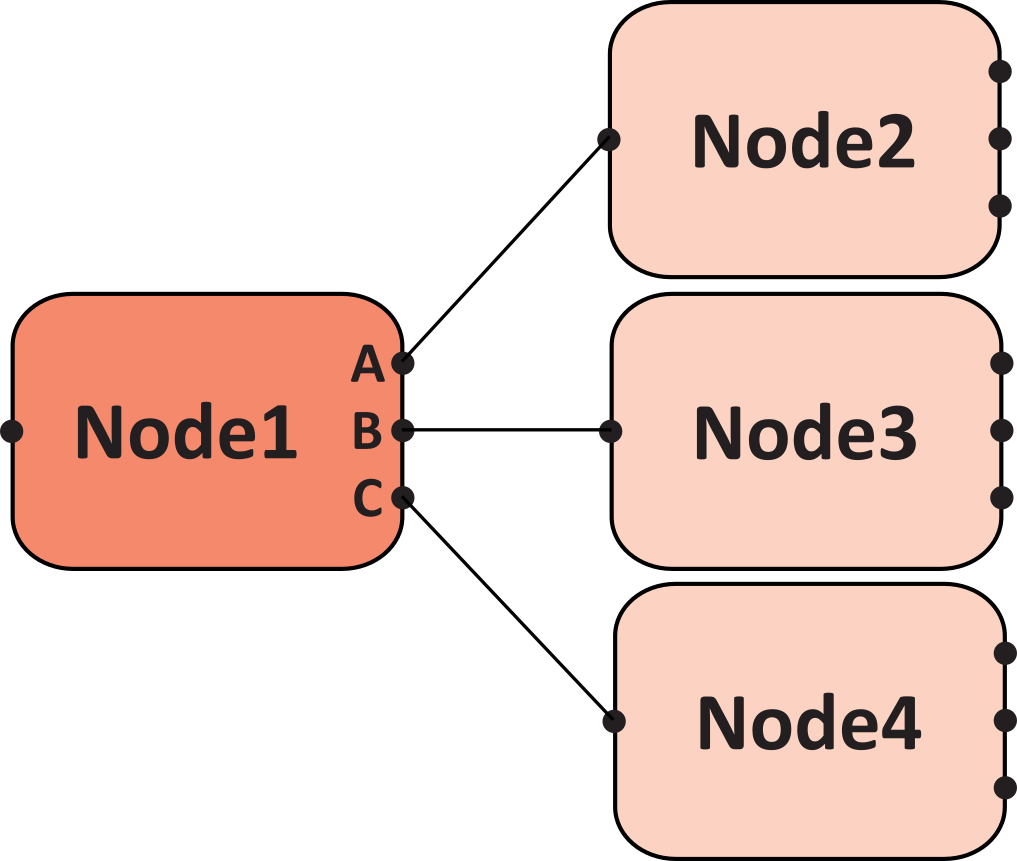

Here’s a concrete example for a simple node network consisting of 4 nodes:

In this graph, evaluation first calculates outputs for Node1 (that is, Node1.A, Node1.B, Node1.C), followed by parallel evaluation of Nodes 2, 3, and 4 (that is, Read Node1.A to use in Node2, Read Node1.B to use in Node3, and so on).
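
The two guidelines above can be illustrated with a toy Python class. It is not a Maya plug-in: `SafeNode` is a hypothetical stand-in showing per-instance state (nothing shared between instances) and a lock around lazy evaluation, so that several successors reading the same result concurrently all receive the same answer.

```python
import threading


class SafeNode:
    """Each instance owns its cache; lazy evaluation is guarded by a lock."""

    def __init__(self, scale):
        self.scale = scale
        self._cache = {}                 # per-instance, not class-level
        self._lock = threading.Lock()
        self.compute_calls = 0

    def compute(self, frame):
        # Without the lock, two threads could both see a missing entry
        # and race to fill it.
        with self._lock:
            if frame not in self._cache:
                self.compute_calls += 1
                self._cache[frame] = frame * self.scale
            return self._cache[frame]


node = SafeNode(scale=2.0)
results = []


def successor():
    # Stands in for a downstream node reading the upstream result.
    results.append(node.compute(10))


threads = [threading.Thread(target=successor) for _ in range(8)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
```

A lock is the bluntest fix; computing results once during the node's own evaluation and exposing only finished data avoids the contention altogether.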

Knowing that making legacy code thread-safe requires time, we have added new scheduling types to provide control over how the EM schedules nodes. Scheduling types provide a straightforward migration path, so you do not need to hold off on performance improvements just because a few nodes still need work.

There are 4 scheduling types:

| Scheduling Type | What are you telling the scheduler? |
|---|---|
| Parallel | Asserts that the node and all third-party libraries used by the node are thread-safe. The scheduler may evaluate any instances of this node at the same time as instances of other nodes without restriction. |
| Serial | Asserts it is safe to run this node with instances of other nodes. However, all nodes with this scheduling type should be executed sequentially within the same evaluation chain. |
| Globally Serial | Asserts it is safe to run this node with instances of other node types but only a single instance of this node type should be run at a time. Use this type if the node relies on static state, which could lead to unpredictable results if multiple node instances are simultaneously evaluated. The same restriction may apply if third-party libraries store state. |
| Untrusted | Asserts this node is not thread-safe and that no other nodes should be evaluated while an instance of this node is evaluated. Untrusted nodes are deferred as much as possible (that is, until there is nothing left to evaluate that does not depend on them), which can introduce costly synchronization. |

By default, nodes are scheduled as Serial, which provides a middle ground between performance and stability/safety. In some cases, this is too permissive and nodes must be downgraded to GloballySerial or Untrusted. In other cases, some nodes can be promoted to Parallel. As you can imagine, the more parallelism supported by nodes in your graph, the higher the level of concurrency you are likely to obtain.
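
One way to read the table above is as a set of rules for when two tasks may overlap. The sketch below encodes that reading in a deliberately simplified form; it is an interpretation for illustration, not the scheduler's actual logic. The `task` helper, the `chain` identifier, and the example node types are hypothetical.

```python
PARALLEL = "Parallel"
SERIAL = "Serial"
GLOBALLY_SERIAL = "GloballySerial"
UNTRUSTED = "Untrusted"


def task(scheduling_type, node_type, chain):
    """Describe a task: scheduling type, node type, and evaluation chain id."""
    return {"type": scheduling_type, "node_type": node_type, "chain": chain}


def may_run_concurrently(a, b):
    """Simplified reading of the scheduling-type table for two tasks."""
    # Untrusted: nothing else may run alongside it.
    if UNTRUSTED in (a["type"], b["type"]):
        return False
    # Globally Serial: one instance of a given node type at a time.
    if (a["type"] == b["type"] == GLOBALLY_SERIAL
            and a["node_type"] == b["node_type"]):
        return False
    # Serial: sequential within the same evaluation chain.
    if a["type"] == b["type"] == SERIAL and a["chain"] == b["chain"]:
        return False
    return True


expr_a = task(GLOBALLY_SERIAL, "expression", chain=1)
expr_b = task(GLOBALLY_SERIAL, "expression", chain=2)
xform = task(PARALLEL, "transform", chain=1)
legacy = task(UNTRUSTED, "customNode", chain=3)
serial_a = task(SERIAL, "customDeformer", chain=4)
serial_b = task(SERIAL, "customDeformer", chain=4)
serial_c = task(SERIAL, "customDeformer", chain=5)
```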

Tip. When testing your plug-ins with Parallel Maya, a simple strategy is to schedule nodes with the most restrictive scheduling type (that is, Untrusted), and then validate that evaluation produces correct results. Raise individual nodes to the next scheduling level, and repeat the experiment.
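
The strategy in this tip amounts to a simple loop, sketched below. The validation step is represented by a callable you would supply (for example, one that sets the scheduling type, plays the scene, and compares results against a reference); the function name and the example validator are hypothetical.

```python
# Ordered from most restrictive to most permissive.
LEVELS = ["Untrusted", "GloballySerial", "Serial", "Parallel"]


def find_most_permissive_level(is_correct_at):
    """Return the most permissive level that still validates, or None.

    is_correct_at: callable taking a level name and returning True when
    evaluation at that scheduling level produces correct results.
    """
    best = None
    for level in LEVELS:
        if not is_correct_at(level):
            break
        best = level
    return best


# Hypothetical node that relies on static state: it is only correct when a
# single instance of its type runs at a time.
known_good = {"Untrusted", "GloballySerial"}
chosen = find_most_permissive_level(lambda level: level in known_good)
```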

There are three ways to alter the scheduling level of your nodes:

Evaluation Toolkit. Use this tool to query or change the scheduling type of different node types.

C++/Python API methods. Use the OpenMaya API to specify the desired node scheduling by overriding the MPxNode::schedulingType method. This function should return one of the enumerated values specified by MPxNode::SchedulingType. See the Maya MPxNode class reference for more details.

MEL/Python Commands. Use the evaluationManager command to change the scheduling type of nodes at runtime. Below, we illustrate how you can change the scheduling of scene transform nodes:

| Scheduling Type | Command |
|---|---|
| Parallel | evaluationManager -nodeTypeParallel on "transform" |
| Serial | evaluationManager -nodeTypeSerialize on "transform" |
| GloballySerial | evaluationManager -nodeTypeGloballySerialize on "transform" |
| Untrusted | evaluationManager -nodeTypeUntrusted on "transform" |

The Evaluation Toolkit and MEL/Python Commands methods for altering the node scheduling level work through node type overrides: they add an override that applies to all nodes of a given type. Using C++/Python API methods and overriding the MPxNode::schedulingType function gives the flexibility to change the scheduling type for each node instance. For example, expression nodes are marked as globally serial if the expression outputs are a purely mathematical function of the inputs.

The expression engine is not thread-safe so only one expression can run at a time, but it can run in parallel with any other nodes. However, if the expression uses unsafe commands (expressions could use any command to access any part of the scene), the node is marked as untrusted because nothing can run while the expression is evaluated.

This changes the way scheduling types should be queried. Using the evaluationManager command with the above flags in query mode will return whether an override has been set on the node type, using either the Evaluation Toolkit or the MEL/Python commands.

The Evaluation Toolkit window lets you query both the override type on the node type (which cannot vary from one node of the same type to another) and the actual scheduling type used for a node when building the scheduling graph (which can change from one node instance to another).

On rare occasions you may notice that Maya switches from Parallel to Serial evaluation during manipulation or playback. This is due to Safe Mode, which attempts to trap errors that possibly lead to instabilities. If Maya detects that multiple threads are attempting to simultaneously access a single node instance, evaluation will be forced to Serial execution to prevent problems.

Tip. If Safe Mode forces your scene into Serial mode, the EM may not produce the expected results when manipulating. In such cases you can either disable the EM:

    cmds.evaluationManager(mode="off")

or disable EM-accelerated manipulation:

    cmds.evaluationManager(man=0)

While Safe Mode exposes many problems, it cannot catch them all. Therefore, we have also developed a special Analysis Mode that performs a more thorough (and costly) check of your scene. Analysis mode is designed for riggers/TDs wishing to troubleshoot evaluation problems during rig creation. Avoid using Analysis Mode during animation since it will slow down your scene.

As previously described, the EG adds necessary node-level scheduling information to the DG. To make sure evaluation is correct, it is critical the EG always be up-to-date, reflecting the state of the scene. The process of detecting things that have changed and rebuilding the EG is referred to as graph invalidation.

Different actions may invalidate the EG, including:

Other, less obvious, actions include:

Frequent graph invalidations may limit the performance gains of parallel evaluation, or even slow your scene down (see Idle Actions), since Maya requires DG dirty propagation and evaluation to rebuild the EG. To avoid unwanted graph rebuilds, consider adding 2 keys, each with slightly different values, on rig attributes that you expect to use frequently. You can also lock static channels to prevent creation of static animation curves during keying. We expect to continue tuning this area of Maya, with the goal of making the general case as interactive as possible.
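
The "2 keys with slightly different values" suggestion could be scripted along the lines of the sketch below. It uses the setKeyframe command with its attribute, time, and value flags; the helper name, the epsilon value, and the example node are hypothetical, and `cmds` is passed in so the helper can be tried outside Maya with a stand-in object.

```python
def key_with_variation(cmds, node, attribute, value,
                       start_frame, end_frame, epsilon=0.001):
    """Set two keys whose values differ slightly so the curve is not static.

    cmds is expected to be the maya.cmds module.
    Returns the two values that were keyed.
    """
    end_value = value + epsilon
    cmds.setKeyframe(node, attribute=attribute, time=start_frame, value=value)
    cmds.setKeyframe(node, attribute=attribute, time=end_frame, value=end_value)
    return value, end_value


# Inside Maya, usage would look like this (node name is an example):
#   import maya.cmds as cmds
#   key_with_variation(cmds, "arm_ctrl", "translateX", 0.0, 1, 100)
```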

In this section, we discuss the different idle actions available in Maya that help rebuild the EG without any intervention from the user. Prior to Maya 2019, only one idle action, the EG rebuild, was available, but it was not enabled by default. Since Maya 2019, we have added another idle action, the EG preparation for manipulation, and both of these are enabled by default.

Here is a description of the idle actions:

| Idle Action | Description |

|---|---|

| EG Rebuild | Builds the graph topology. This idle action is executed after a file load operation, or after a graph topology invalidation. |

| EG Preparation for manipulation | Partitions and schedules the graph. This idle action is executed after a graph rebuild (either manually or through the idle action), or after a partitioning invalidation. |

Tip. You can use the evaluationManager command to change which idle actions are enabled. You can enable and disable both idle actions individually.

To make use of Parallel Evaluation and GPU deformation during manipulation, the EG needs to be properly built, partitioned, and scheduled; otherwise, evaluation will revert to DG. These idle actions allow the EG to automatically build and be ready to use when needed, since they are triggered at file load and after graph invalidation.

If you use Cached Playback, your cache automatically refills, too. This way, you can start playing from cache as soon as the scene is loaded or after you modify the scene.

In a typical frame evaluation, temporary values that are set on keyed attributes are restored to their original values, that is, the values on their associated curves. With the idle actions, this is an unwanted behavior, otherwise you would not be able to do any modifications to keyed attributes. To circumvent that issue, we had to add some special behaviors. One of these is the dirty propagation from stale plugs after an idle preparation for manipulation. When not in idle preparation for manipulation, this operation is done during the partitioning and scheduling phase. With idle preparation for manipulation, this operation is done at the next complete evaluation. Therefore, if you have many static curves, you might experience a slowdown on the first frame of playback.

If you do frequent operations that invalidate the graph or partitioning, you may experience some slowdowns due to the graph always being rebuilt. In such cases, it is advised that you disable the offending idle action until you are done.

This section describes how the Evaluation Manager can be used to accelerate interactive manipulation.

Before providing accelerated manipulation, the Evaluation Manager must validate that the requested manipulation can safely be evaluated in parallel. This requires the manipulated attributes, along with all their downstream dependencies, to be known to the Evaluation Manager and therefore be part of the Evaluation Graph. Since the Evaluation Graph is built for the animated attributes, this typically means that accelerated manipulation is available for animated attributes.

Tip. You can use the controller command to identify objects that are used as animation sources in your scene. If the Include controllers in evaluation graph option is set (see Windows > Settings/Preferences > Preferences, then Settings > Animation), the objects marked as controllers will automatically be added to the evaluation graph even if they are not animated yet. This allows Parallel evaluation for manipulation even if they have not yet been keyed.

See the Curve Manager Evaluator section for more advanced graph prepopulation.

Before allowing accelerated manipulation, the Evaluation Manager runs a prevalidation phase to make sure all the manipulated attributes are supported. If they are, accelerated manipulation can be allowed right away and happens on the very first frame of manipulation.

However, Manipulation Prevalidation can fail for a number of reasons: some of the manipulated attributes might not be known to the Evaluation Manager, only some of the channels of a compound attribute (like translate) might be animated, non-trivial controller setups might be used, etc. If that is the case, the Evaluation Manager will resort to dirty propagation from the manipulated attributes to validate whether this dirty propagation only touches nodes and attributes that are known to the Evaluation Manager. While this step is more expensive, it is the ground truth to determine whether or not it is safe to evaluate the result of the manipulation in parallel. Manipulation Prevalidation can be thought of as an optimization attempting to recognize supported patterns to avoid this expensive step in a safe subset of the use cases.

Tip. It is possible to disable Manipulation Prevalidation using the Evaluation Toolkit. In the 01) Modes section, in Advanced, uncheck the Manipulation Prevalidation checkbox. Note that this should only be used for debugging purposes and should never be required.

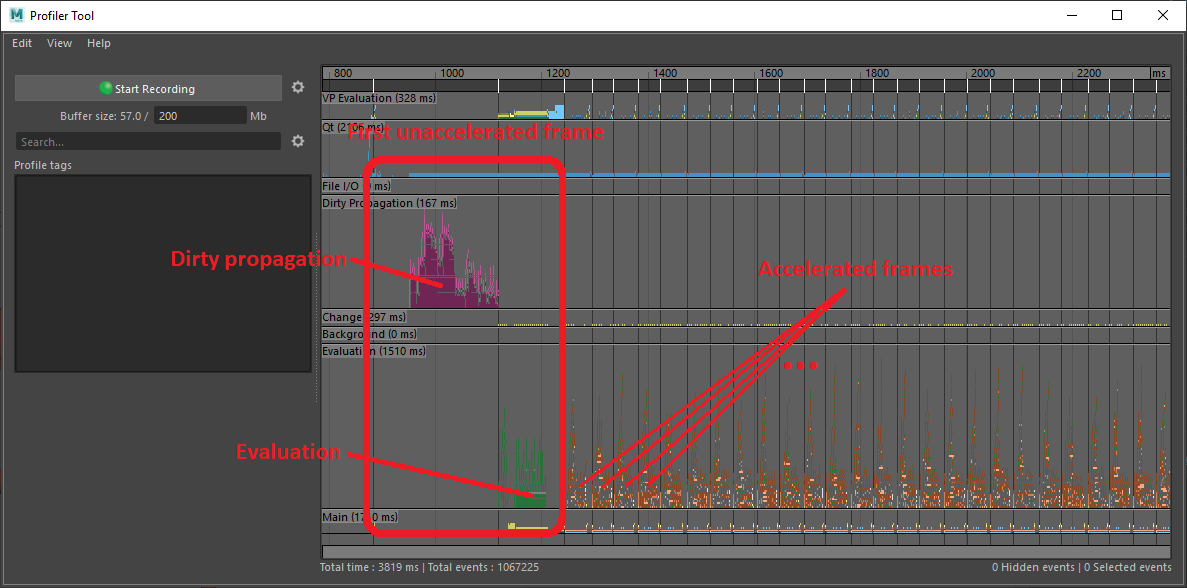

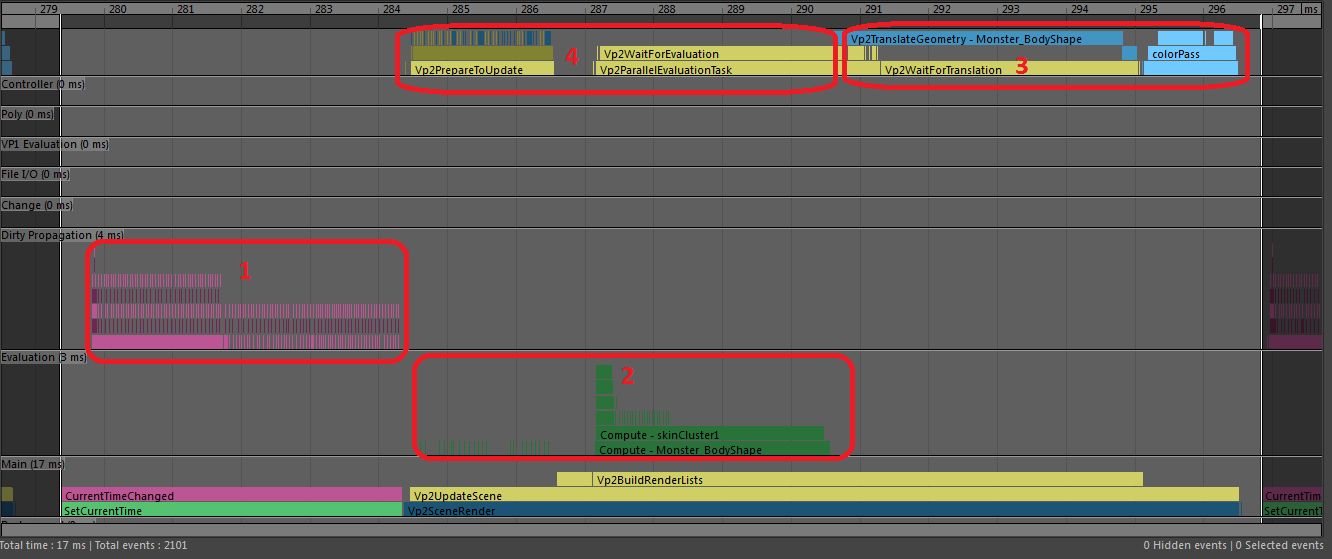

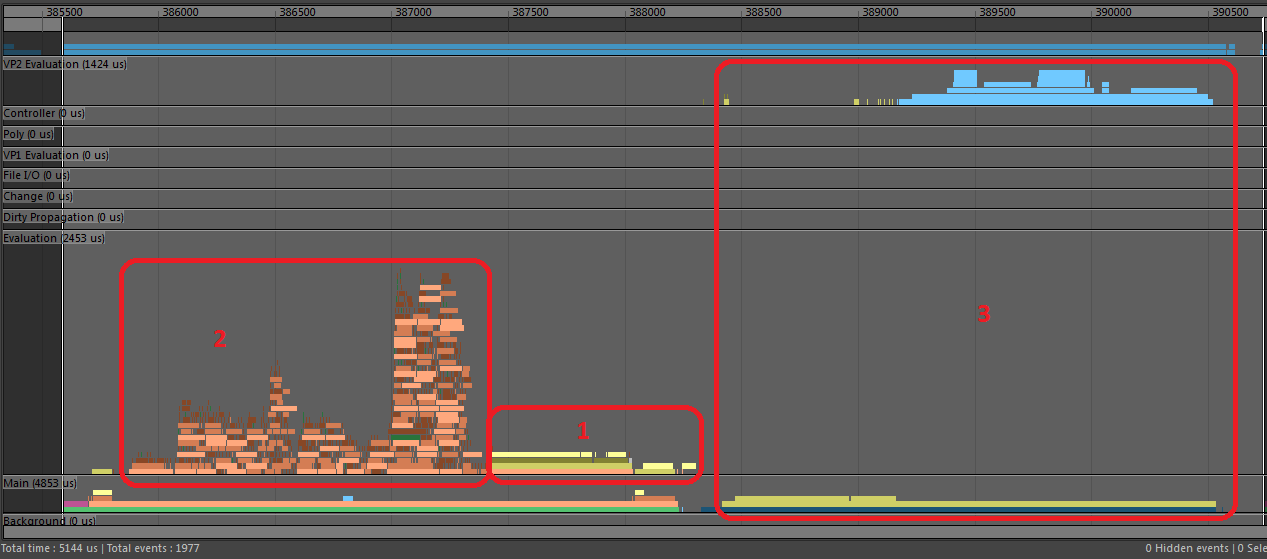

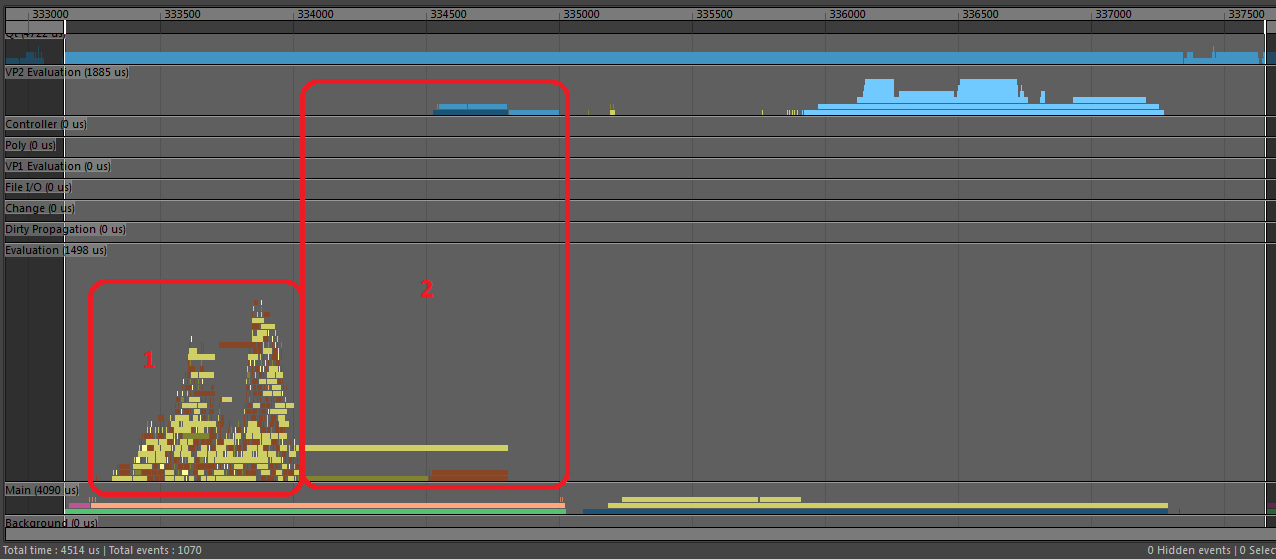

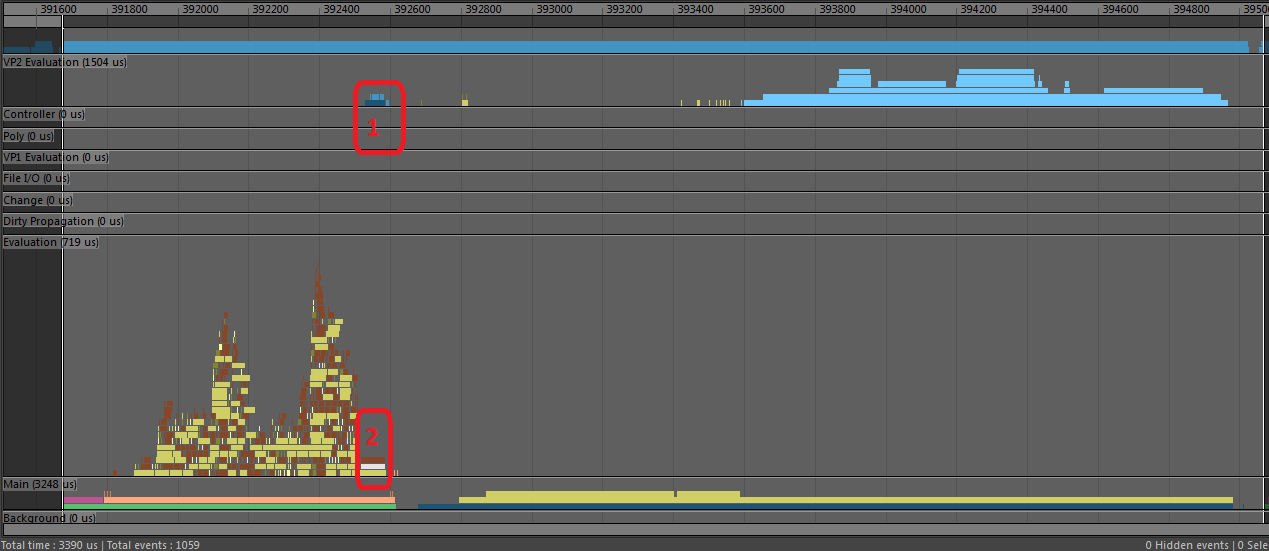

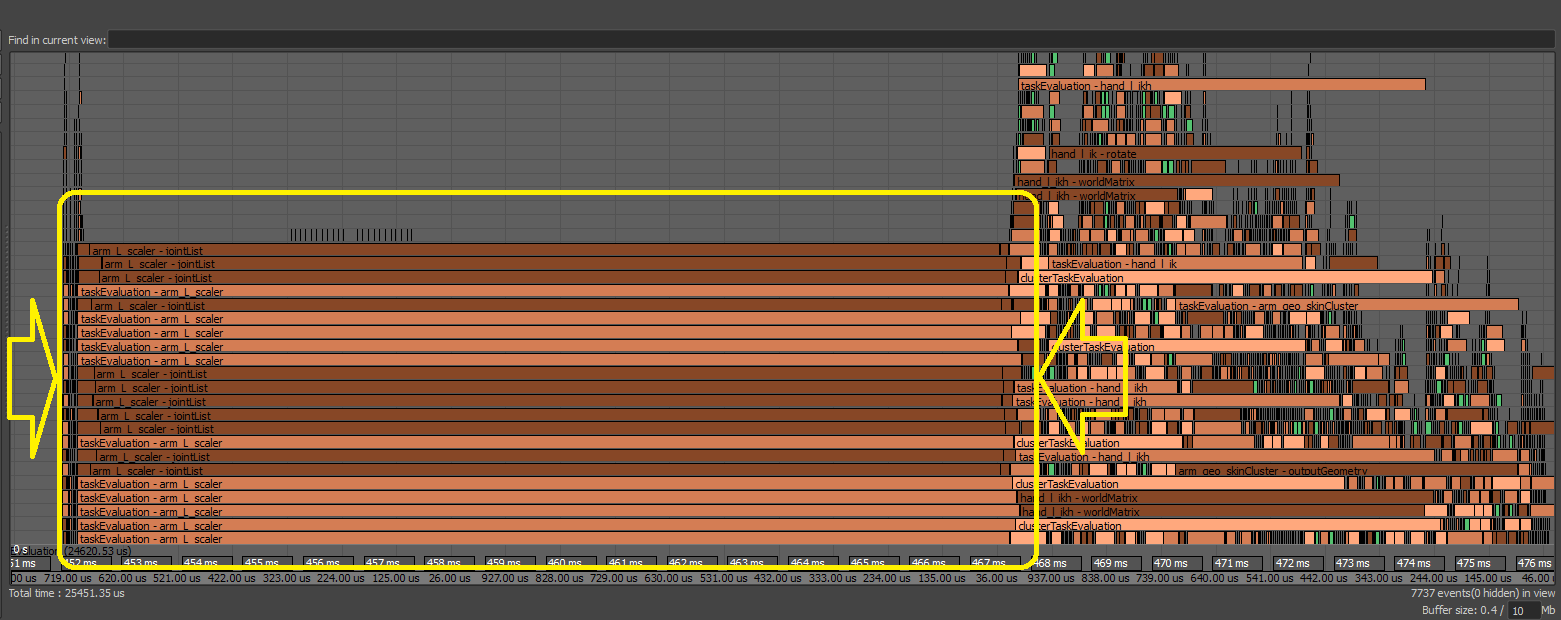

The following profiler image illustrates what happens when Manipulation Prevalidation fails to determine whether it is safe or not to perform accelerated manipulation. The first evaluated frame will happen using serial DG evaluation: first, a phase of dirty propagation which will be monitored to determine the safety of running subsequent frames in parallel; second, pull evaluation. If the dirty propagation phase confirms accelerated manipulation can be done safely, following frames will be fully accelerated.

Accelerated manipulation does not evaluate all nodes inside the Evaluation Graph. Instead, it tries to do the minimum amount of work, i.e. only evaluate the nodes affected by the manipulated attributes. This is known as partial evaluation.

The concept is fairly simple: during an accelerated manipulation frame, only the nodes marked for partial evaluation will be computed. Initially, the manipulated nodes are marked, and then all downstream nodes (i.e. all affected nodes) are marked recursively.

For instance, in a multi-character scene, partial evaluation makes sure only the manipulated character will be recomputed, instead of every character in the scene.
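
The marking step of partial evaluation can be sketched as a downstream traversal. This is a simplified standalone model, not the EM's implementation; the function name and the two-character graph are hypothetical.

```python
from collections import deque


def mark_for_partial_evaluation(downstream, manipulated):
    """Return the manipulated nodes plus every node downstream of them.

    downstream: dict mapping node name -> list of directly affected nodes.
    manipulated: iterable of node names being manipulated.
    """
    marked = set(manipulated)
    queue = deque(marked)
    while queue:
        node = queue.popleft()
        for affected in downstream.get(node, ()):
            if affected not in marked:
                marked.add(affected)
                queue.append(affected)
    return marked


# Two unconstrained characters sharing one scene.
graph = {
    "heroCtrl": ["heroArm"],
    "heroArm": ["heroHand"],
    "villainCtrl": ["villainArm"],
    "villainArm": ["villainHand"],
}
to_evaluate = mark_for_partial_evaluation(graph, ["heroCtrl"])
```

Only the hero's nodes end up marked, so the villain is left untouched during the manipulation.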

In this section, we describe mechanisms to perform targeted evaluation of node sub-graphs. This approach is used by Maya to accelerate deformations on the GPU and to catch evaluation errors for scenes with specific nodes. Maya 2017 also introduced new Open API extensions, allowing user-defined custom evaluators.

Tip. Use the evaluator command to query the available/active evaluators or modify currently active evaluators. Some evaluators support using the nodeType flag to filter out or include nodes of certain types. Query the info flag on the evaluator for more information on what it supports.

    import maya.cmds as cmds

    # Returns a list of all currently available evaluators.
    cmds.evaluator( query=True )
    # Result: [u'invisibility', u'frozen', ... u'transformFlattening', u'pruneRoots'] #

    # Returns a list of all currently enabled evaluators.
    cmds.evaluator( query=True, enable=True )
    # Result: [u'invisibility', u'timeEditorCurveEvaluator', ... u'transformFlattening', u'pruneRoots'] #

Note: Enabling or disabling custom evaluators only applies to the current Maya session: the state is not saved in the scene nor in the user preferences. The same applies to configuration done using the evaluator command and the configuration flag.

Maya contains a custom deformer evaluator that accelerates deformations in Viewport 2.0 by targeting deformation to the GPU. GPUs are ideally suited to tackle problems such as mesh deformations that require the same operations on streams of vertex and normal data. We have included GPU implementations for several of the most commonly-used deformers in animated scenes: blendShape, cluster, createColorSet, deltaMush, ffd, groupParts, mesh, morph, nonLinear, polyColorPerVertex, polyNormalPerVertex, polySmoothFace, polyTweakUV, proximityWrap, sculpt, skinCluster, softMod, solidify, tension, tweak and wire.

Tip. Use the deformerEvaluator command to query the available GPU deformers.

    import maya.cmds as cmds

    # Returns a list of all currently available GPU deformers.
    sorted(cmds.deformerEvaluator(query=True, deformers=True))
    # Result: [u'blendShape', u'cluster', ... u'tweak', u'wire'] #

Unlike Maya’s previous deformer stack that performed deformations on the CPU and subsequently sent deformed geometry to the graphics card for rendering, the GPU override sends undeformed geometry to the graphics card, performs deformations in OpenCL and then hands off the data to Viewport 2.0 for rendering without read-back overhead. We have observed substantial speed improvements from this approach in scenes with dense geometry.

Even if your scene uses only supported deformers, GPU override may not be enabled due to the use of unsupported node features in your scene. For example, all deformers will be pulled out of GPU evaluation if the weightFunction attribute is animated or if the current geometry is used to compute the weights (instead of the original geometry).

Additional deformer-specific limitations are listed below:

| Deformer | Limitation(s) |
|---|---|
| blendShape | Pulls out of GPU deformation if partial component is used. The following attribute values are ignored: |
| cluster | n/a |
| createColorSet | Only a passthrough, does not actually affect deformation |
| deltaMush | Pulls out of GPU deformation if original geometry is animated or if any of the following attributes is animated: |
| ffd | n/a |
| groupParts | Only a passthrough, does not actually affect deformation |
| mesh | Required as part of geometry chains, does not actually affect deformation |
| morph | Pulls out of GPU deformation if original geometry is animated |
| nonLinear | Default setup creates a cycle preventing GPU deformation |
| polyColorPerVertex | Only a passthrough, does not actually affect deformation |
| polyNormalPerVertex | Only a passthrough, does not actually affect deformation |
| polySmoothFace | Pulls out of GPU deformation if division level is not 0 |
| polyTweakUV | Only a passthrough, does not actually affect deformation |
| proximityWrap | Pulls out of GPU deformation if original geometry is animated |
| sculpt | n/a |
| skinCluster | The following attribute values are ignored: |
| softMod | |
| solidify | Pulls out of GPU deformation if original geometry is animated |
| tension | Pulls out of GPU deformation if original geometry is animated |
| tweak | |
| wire | Pulls out of GPU deformation if holder curves are present |

A few other reasons that can prevent GPU override from accelerating your scene:

Meshes not sufficiently dense. Unless meshes have a large number of vertices, it is still faster to perform deformations on the CPU. This is due to the driver-specific overhead incurred when sending data to the GPU for processing. For deformations to happen on the GPU, your mesh needs over 500/2000 vertices, on AMD/NVIDIA hardware respectively. Use the MAYA_OPENCL_DEFORMER_MIN_VERTS environment variable to change the threshold. Setting the value to 0 sends all meshes connected to supported deformation chains to the GPU.

Downstream graph nodes require deformed mesh results. Since GPU read-back is a known bottleneck in GPGPU, no node, script, or viewport can read the mesh data computed by the GPU override. This means that GPU override is unable to accelerate portions of the EG that are upstream of nodes, such as follicle or pointOnPolyConstraint, that require information about the deformed mesh. We will re-examine this limitation as software/hardware capabilities mature. When diagnosing GPU Override problems, this situation may appear as an unsupported fan-out pattern. See deformerEvaluator command, below, for details.

Animated Topology. If your scene animates the number of mesh edges, vertices, and/or faces during playback, corresponding deformation chains are removed from the GPU deformation path.

Maya Catmull-Clark Smooth Mesh Preview is used. We have included acceleration for OpenSubDiv (OSD)-based smooth mesh preview, however there is no support for Maya’s legacy Catmull-Clark. To take advantage of OSD OpenCL acceleration, select OpenSubDiv Catmull-Clark as the subdivision method and make sure that OpenCL Acceleration is selected in the OpenSubDiv controls.

Unsupported streams are found. Depending on which drawing mode you select for your geometry (for example, shrunken faces, hedge-hog normals, and so on) and the material assigned, Maya must allocate and send different streams of data to the graphics card. Since we have focused our efforts on common settings used in production, GPU override does not currently handle all stream combinations. If meshes fail to accelerate due to unsupported streams, change display modes and/or update the geometry material.

Back face culling is enabled.

Driver-related issues. We are aware of various hardware issues related to driver support/stability for OpenCL. To maximize Maya’s stability, we have disabled GPU Override in the cases that will lead to problems. We expect to continue to eliminate restrictions in the future and are actively working with hardware vendors to address detected driver problems.
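
The vertex-count rule from the "Meshes not sufficiently dense" item above can be modeled as a small check. This sketch only mirrors the thresholds and the environment variable named in the text; it is not how Maya implements the test, and the function names and the vendor keys are hypothetical. All the other conditions listed above still apply independently.

```python
import os

# Default thresholds stated above: over 500 (AMD) or 2000 (NVIDIA) vertices.
DEFAULT_MIN_VERTS = {"AMD": 500, "NVIDIA": 2000}


def min_verts_threshold(vendor, environ=None):
    """Return the threshold, honoring MAYA_OPENCL_DEFORMER_MIN_VERTS if set."""
    environ = os.environ if environ is None else environ
    override = environ.get("MAYA_OPENCL_DEFORMER_MIN_VERTS")
    if override is not None:
        return int(override)
    return DEFAULT_MIN_VERTS[vendor]


def passes_vertex_threshold(vertex_count, vendor, environ=None):
    """True when the mesh has more vertices than the threshold."""
    return vertex_count > min_verts_threshold(vendor, environ)


# Explicit dictionaries stand in for the process environment here.
dense_on_amd = passes_vertex_threshold(1000, "AMD", environ={})
dense_on_nvidia = passes_vertex_threshold(1000, "NVIDIA", environ={})
forced = passes_vertex_threshold(
    10, "NVIDIA", environ={"MAYA_OPENCL_DEFORMER_MIN_VERTS": "0"}
)
```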

You can also increase support for new custom/proprietary deformers by using new API extensions (refer to Custom GPU Deformers for details).

If you enable GPU Override and the HUD reports Enabled (0 k), this indicates that no deformations are happening on the GPU. There could be several reasons for this, such as those mentioned above.

To troubleshoot factors that limit the use of GPU override for your particular scene, use the deformerEvaluator command. Supported options include:

| Command | What does it do? |
|---|---|
| deformerEvaluator | Prints the chain for each selected node, or a reason if it is not supported. |
| deformerEvaluator -chains | Prints all active deformation chains. |
| deformerEvaluator -meshes | Prints a chain for each mesh or a reason if it is not supported. |
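The three variants can be gathered into a single diagnostic call. The following is a minimal sketch, assuming the Python binding exposes the MEL flags as `chains=True` and `meshes=True`. The helper name `gpu_override_report` and its injectable `run` parameter are hypothetical; the parameter exists only so the helper can be exercised outside a Maya session.

```python
def gpu_override_report(run=None):
    """Collect the output of the three deformerEvaluator variants.

    `run` is a hypothetical hook: inside Maya it defaults to
    cmds.deformerEvaluator, elsewhere any callable can stand in.
    """
    if run is None:
        # maya.cmds is only importable inside a Maya session.
        import maya.cmds as cmds
        run = cmds.deformerEvaluator
    return {
        "selection": run(),           # deformerEvaluator
        "chains": run(chains=True),   # deformerEvaluator -chains
        "meshes": run(meshes=True),   # deformerEvaluator -meshes
    }
```

Inside Maya, select the meshes of interest and call `gpu_override_report()` with no arguments.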

Starting in Maya 2017, the dynamics evaluator fully supports parallel evaluation of scenes with Nucleus (nCloth, nHair, nParticles), Bullet, and Bifrost dynamics. Legacy dynamics nodes (for example, particles, fluids) remain unsupported. If the dynamics evaluator finds unsupported node types in the EG, Maya will revert to DG-based evaluation. The dynamics evaluator also manages the tricky computation necessary for correct scene evaluation. This is one of the ways custom evaluators can be used to change Maya’s default evaluation behavior.

The dynamics evaluator supports several configuration flags to control its behavior.

| Flag | What does it do? |
|---|---|
| disablingNodes | Specifies the set of nodes that will force the dynamics evaluator to disable the EM. Valid values are: legacy2016, unsupported, and none. |
| handledNodes | Specifies the set of nodes that are going to be captured by the dynamics evaluator and scheduled in clusters that it will manage. Valid values are: dynamics and none. |
| action | Specifies how the dynamics evaluator will handle its nodes. Valid values are: none, evaluate, and freeze. |

In Maya 2017, the default configuration corresponds to:

cmds.evaluator(name="dynamics", c="disablingNodes=unsupported")

cmds.evaluator(name="dynamics", c="handledNodes=dynamics")

cmds.evaluator(name="dynamics", c="action=evaluate")

where unsupported (that is, blacklisted) nodes are:

This configuration disables evaluation if any unsupported nodes are encountered, and performs evaluation for the other handled nodes in the scene.

To revert to Maya 2016 / 2016 Extension 2 behavior, use the configuration:

cmds.evaluator(name="dynamics", c="disablingNodes=legacy2016")

cmds.evaluator(name="dynamics", c="handledNodes=none")

cmds.evaluator(name="dynamics", c="action=none")

where unsupported (that is, blacklisted) nodes are:

Tip. To get a list of nodes that cause the dynamics evaluator to disable the EM in its present configuration, use the following command:

cmds.evaluator(name="dynamics", valueName="disabledNodes", query=True)

You can configure the dynamics evaluator to ignore unsupported nodes. If you want to try Parallel evaluation on a scene where it is disabled because of unsupported node types, use the following commands:

cmds.evaluator(name="dynamics", c="disablingNodes=none")

cmds.evaluator(name="dynamics", c="handledNodes=dynamics")

cmds.evaluator(name="dynamics", c="action=evaluate")

Note: Using the dynamics evaluator on unsupported nodes may cause evaluation problems and/or application crashes; this is unsupported behavior. Proceed with caution.

Tip. If you want the dynamics evaluator to skip evaluation of all dynamics nodes in the scene, use the following commands:

cmds.evaluator(name="dynamics", c="disablingNodes=unsupported")

cmds.evaluator(name="dynamics", c="handledNodes=dynamics")

cmds.evaluator(name="dynamics", c="action=freeze")

This can be useful to quickly disable dynamics when the simulation impacts animation performance.
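Because each configuration above is always a triple of flags, it can help to validate the values before sending them to Maya. The following is a minimal sketch: the helper names `dynamics_config` and `apply_dynamics_config` are hypothetical, and the valid values are copied from the flag table above.

```python
# Valid values as listed in the dynamics evaluator flag table.
VALID_VALUES = {
    "disablingNodes": {"legacy2016", "unsupported", "none"},
    "handledNodes": {"dynamics", "none"},
    "action": {"none", "evaluate", "freeze"},
}


def dynamics_config(disabling_nodes, handled_nodes, action):
    """Return the three 'flag=value' strings, rejecting undocumented values."""
    settings = [
        ("disablingNodes", disabling_nodes),
        ("handledNodes", handled_nodes),
        ("action", action),
    ]
    for flag, value in settings:
        if value not in VALID_VALUES[flag]:
            raise ValueError("%s=%s is not a documented value" % (flag, value))
    return ["%s=%s" % (flag, value) for flag, value in settings]


def apply_dynamics_config(config_strings, evaluator=None):
    """Send each string to the dynamics evaluator.

    `evaluator` is a hypothetical hook; inside Maya it defaults to
    cmds.evaluator.
    """
    if evaluator is None:
        import maya.cmds as cmds  # only available inside Maya
        evaluator = cmds.evaluator
    for config in config_strings:
        evaluator(name="dynamics", configuration=config)
```

For example, `apply_dynamics_config(dynamics_config("unsupported", "dynamics", "freeze"))` reproduces the freeze configuration shown above.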

Dynamics simulation results are very sensitive to evaluation order, which may differ between DG and EM-based evaluation. Even for DG-based evaluation, evaluation order may depend on multiple factors. For example, in DG-mode when rendering simulation results to the Viewport, the evaluation order may be different than when simulation is performed in ‘headless mode’. Though EM-based evaluation results are not guaranteed to be identical to DG-based, evaluation order is consistent; once the evaluation order is scheduled by the EM, it will remain consistent regardless of whether results are rendered or Maya is used in batch. This same principle applies to non-dynamics nodes that are order-dependent.

When a reference is unloaded it leaves several nodes in the scene representing reference edits to preserve. Though these nodes may inherit animation from upstream nodes, they do not contribute to what is rendered and can be safely ignored during evaluation. The reference evaluator ensures all such nodes are skipped during evaluation.

Toggling scene object visibility is a critical artist workflow used to reduce visual clutter and accelerate performance. To bring this workflow to parallel evaluation, Maya 2017 and above includes the invisibility evaluator, whose goal is to skip evaluation of any node that does not contribute to a visible object.

The invisibility evaluator will skip evaluation of DAG nodes meeting any of the below criteria:

- visibility attribute is false.
- intermediateObject attribute is true.
- overrideEnabled attribute is true and overrideVisibility attribute is false.
- The node belongs to a display layer whose enabled attribute is true and visibility attribute is false.

As of Maya 2018, the invisibility evaluator supports the isolate select method of hiding objects. If there is only a single Viewport, and it has one or more objects isolated, then all of the other, unrelated objects are considered invisible by the evaluator.
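The per-node criteria can be read as a simple predicate. The following is a minimal sketch in plain Python, not Maya code: the dictionary layout and the function name `is_statically_invisible` are hypothetical, and the `displayLayer` entry reflects an assumption that the last criterion refers to the display layer the node belongs to. Isolate select and the propagation to nodes that only feed invisible objects are not modeled.

```python
def is_statically_invisible(node):
    """Return True if a node (a dict of attribute values) meets any criterion."""
    if not node.get("visibility", True):
        return True
    if node.get("intermediateObject", False):
        return True
    if node.get("overrideEnabled", False) and not node.get("overrideVisibility", True):
        return True
    # Assumption: enabled/visibility in the last criterion belong to a display layer.
    layer = node.get("displayLayer")
    if layer and layer.get("enabled", False) and not layer.get("visibility", True):
        return True
    return False
```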

There is also support in Maya (2018 and up) for the animated attribute on expression nodes. When this attribute is set to 1, the expression node is not skipped by the invisibility evaluator, even if only invisible objects are connected to it.

Note: The default value of the animated attribute is 1, so in an expression-heavy scene you may see a slowdown from Maya 2017 to Maya 2018. To restore performance, run the script below to disable this attribute on all expression nodes. (The attribute is only required when the expression has some sort of side-effect external to the connections, such as printing a message or checking a cache file size.)

for node in cmds.ls( type='expression' ):
    cmds.setAttr( '{}.animated'.format(node), 0 )

Tip: The invisibility evaluator is off by default in Maya 2017. To enable it, use the Evaluation Toolkit or run:

cmds.evaluator(enable=True, name='invisibility')

The invisibility evaluator only considers static visibility; nodes with animated visibility are still evaluated, even if nodes meet the above criteria. If nodes are in a cycle, all cycle nodes must be considered invisible for evaluation to be skipped. Lastly, if a node is instanced and has at least one visible path upward, then all upward paths will be evaluated.

Tip: The invisibility evaluator determines visibility solely from the node’s visibility state; if your UI or plug-in code requires invisible nodes to evaluate, do not use the invisibility evaluator.

Before Maya 2023, the invisibility evaluator set up its optimizations at Partitioning time. In this mode, when the Evaluation Graph is partitioned, the invisibility evaluator checks the visibility state and grabs any node that is invisible or only affecting invisible nodes. These nodes will not be evaluated in following scene evaluations, since they do not contribute to any visible content.

Performing this optimization at Partitioning time has the drawback that anytime visibility information changes, for example from an artist showing or hiding parts of the scene, the graph needs to be repartitioned. This operation can be costly with complex scenes and lead to perceivable lags in the workflow.

Maya 2023 introduced a new invisibility evaluator mode to address this: the Scheduling Mode. This mode can be set from the Evaluation Toolkit. In this mode, visibility-based optimizations are performed dynamically, without requiring a full repartitioning. When visibility state changes, some processing will happen at the next evaluation, but the overhead is considerably smaller than a full partitioning, resulting in a much smoother workflow.

Because of the more aggressive nature of the Partitioning Mode, which grabs nodes early and prevents them from being accessible to other custom evaluators, there are instances where playback performance is faster in the Partitioning Mode than in the Scheduling Mode. The Scheduling Mode, by its dynamic nature, must let the other custom evaluators set themselves up in case of a dynamic visibility change that would require those evaluators to do their work without repartitioning. As a result, due to the interaction with other custom evaluators which might grab too much, the Scheduling Mode might not be able to prevent as much evaluation as the Partitioning Mode. Therefore, there may be a trade-off between the more responsive visibility change workflows provided by the Scheduling Mode and the potentially better playback performance provided by the Partitioning Mode.

The frozen evaluator allows users to tag EG subsections as not needing evaluation. It enhances the frozen attribute by propagating the frozen state automatically to related nodes, according to the rules defined by the evaluator’s configuration. It should only be used by those comfortable with the concepts of connection and propagation in the DAG and Evaluation Graph. Many users may prefer the invisibility evaluator; it is a simpler interface/workflow for most cases.

The frozen attribute has existed on nodes since Maya 2016. It can be used to control whether a node is evaluated in Serial or Parallel EM evaluation modes. In principle, when the frozen attribute is set, the EM skips evaluation of that node. However, there are additional nuances that impact whether or not this is the case:

Warning: All the frozen attribute does is skip evaluation; nothing is done to preserve the current node data during file store. If you load a file with frozen attributes set, the nodes may not have the same data as when you stored them.

The evaluation manager does not evaluate any node that has its frozen attribute set to True, referred to here as explicitly frozen nodes. An implicitly frozen node is one that is disabled because of the operation of the frozen evaluator, but whose frozen attribute is not set to True. When the frozen evaluator is enabled it will also prevent evaluation of related nodes according to the rules corresponding to the enabled options, in any combination.

The frozen evaluator operates in three phases. In phase one, it gathers together all of the nodes flagged by the invisible and displayLayers options as being marked for freezing. In phase two, it propagates the freezing state outwards through the evaluation graph according to the values of the downstream and upstream options.

The list of nodes for propagation is gathered as follows:

- The nodes whose frozen attribute is set to True are found. (Note: This does not include those whose frozen attribute is animated. They are handled via Phase 3.)

The nodes in the list gathered by Phase 1 will all be implicitly frozen. In addition, the downstream and upstream options may implicitly freeze nodes related to them. For each of the nodes gathered so far, the evaluation graph will be traversed in both directions, implicitly freezing nodes encountered according to the following options:

If a node has its frozen or visibility states animated, the evaluator still has to schedule it. The runtime freezing can still assist at this point in preventing unnecessary evaluation. Normally any explicitly frozen node will have its evaluation skipped, with all other nodes evaluating normally. When the runtime option is enabled, after skipping the evaluation of an explicitly frozen node no further scheduling of downstream nodes will occur. As a result, if the downstream nodes have no other unfrozen inputs they will also be skipped.

Note: The runtime option does not really modify the evaluator operation, it modifies the scheduling of nodes for evaluation. You will not see nodes affected by this option in the evaluator information (for example, the output from cmds.evaluator( query=True, clusters=True, name='frozen' ))
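The effect of the runtime option can be illustrated with a small scheduling model. The following is a simplified sketch in plain Python, not the scheduler Maya uses: the graph representation and the function name `runtime_skipped` are hypothetical. A node is skipped when it is explicitly frozen, or when it has inputs and every one of them was skipped.

```python
def runtime_skipped(inputs, order, explicitly_frozen):
    """Return the set of nodes whose evaluation is skipped.

    inputs: node -> list of upstream nodes.
    order: nodes in topological order (inputs before outputs).
    explicitly_frozen: nodes whose frozen attribute is True.
    """
    skipped = set()
    for node in order:
        upstream = inputs.get(node, [])
        if node in explicitly_frozen:
            skipped.add(node)
        elif upstream and all(source in skipped for source in upstream):
            # No unfrozen input ever schedules this node.
            skipped.add(node)
    return skipped


graph = {"jointA": ["ctrlA"], "mesh": ["jointA", "ctrlB"]}
order = ["ctrlA", "ctrlB", "jointA", "mesh"]
print(sorted(runtime_skipped(graph, order, {"ctrlA"})))
```

In this example `jointA` is skipped because its only input is frozen, while `mesh` still evaluates because `ctrlB` remains unfrozen.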

Options can be set for the frozen evaluator in one of two ways:

- Accessing them through the Evaluation Toolkit
- Using the evaluator command’s configuration option:

cmds.evaluator( name='frozen', configuration='KEY=VALUE' )

Legal KEY and VALUE values are below, and correspond to the options as described above:

| KEY | VALUES | DEFAULT |

|---|---|---|

| runtime | True/False | False |

| invisible | True/False | False |

| displayLayers | True/False | False |

| downstream | ‘off’/‘safe’/‘force’ | ‘off’ |

| upstream | ‘off’/‘safe’/‘force’ | ‘off’ |

Unlike most evaluators, the frozen evaluator options are stored in user preferences and persist between sessions.

- Set the frozen attribute to True to instruct the frozen evaluator to shut off evaluation on affected nodes. The most practical use of this would be on a display layer so that nodes can be implicitly frozen as a group.
- If the frozen attribute, or any of the attributes used to define related implicit nodes for freezing (for example, visibility), is animated, then the evaluator will not remove them from evaluation. They will still be scheduled and only the runtime option will help in avoiding unnecessary evaluation.

The curve manager evaluator can be used to include additional nodes in the Evaluation Graph, which can have two main benefits:

To achieve those benefits efficiently, the curve manager evaluator performs two main tasks:

To illustrate this result, let’s compare the three following situations.

The third situation is where we are trying to take advantage of the curve manager evaluator to have an Evaluation Graph that is already set up to allow parallel evaluation when the controllers will be manipulated.

The following table summarizes the differences between the situations and the compromises provided by the curve manager evaluator.

| Situation | # of nodes in EG | Playback | EM Manip | Rebuild when keying |
|---|---|---|---|---|
| Static curves + curve manager off | Lowest | Fastest | No | Yes |
| Animated curves | Highest | Slowest | Yes | No |
| Static curves + curve manager on | Highest | Middle | Yes | No |

In summary, the curve manager evaluator benefits from having the Evaluation Graph already populated with nodes so it is ready to evaluate interactive manipulation, while paying as little of a cost as possible for those constant nodes during playback.

It can be activated using:

cmds.evaluator(
    name="curveManager",
    enable=True
)
cmds.evaluator(
    name="curveManager",
    configuration="forceAnimatedCurves=keyed"
)

The available values for forceAnimatedCurves are:

Another option, forceAnimatedNodes, can be used:

This allows tagging nodes to be added with a boolean dynamic attribute. By default, the name of this attribute is forcedAnimated. If it is present on a node and set to true, the node is added to the graph. The name of the attribute can be controlled by using the “forcedAnimatedAttributeName” option.
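Tagging can be scripted with standard attribute commands. The following is a minimal sketch, assuming the default attribute name `forcedAnimated`; the helper name `tag_forced_animated` is hypothetical, and the `cmds` module is passed in as a parameter only so the helper can be exercised outside Maya.

```python
def tag_forced_animated(nodes, cmds, attribute="forcedAnimated"):
    """Add the boolean tag attribute to each node if missing, then set it to True.

    cmds: the maya.cmds module (or any object offering the same three calls).
    """
    for node in nodes:
        if not cmds.attributeQuery(attribute, node=node, exists=True):
            cmds.addAttr(node, longName=attribute, attributeType="bool")
        cmds.setAttr("%s.%s" % (node, attribute), True)
```

Inside Maya, a call might look like `tag_forced_animated(cmds.ls(selection=True), cmds)`.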

By default, the curve manager evaluator tries to skip the evaluation of the static parts of the graph. For debugging or performance measurement purposes, this optimization can be disabled:

cmds.evaluator(
    name="curveManager",
    configuration="skipStaticEvaluation=disable"
)

In addition to the evaluators described above, additional evaluators exist for specialized tasks:

| Evaluator | What does it do? |

|---|---|

| cache | Constitutes the foundation of Cached Playback. See the Maya Cached Playback whitepaper for more information. |

| timeEditorCurveEvaluator | Finds all paramCurves connected to time editor nodes and puts them into a cluster that will prevent them from evaluating at the current time, since the time editor will manage their evaluation. |

| ikSystem | Automatically disables the EM when a multi-chain solver is present in the EG. For regular IK chains it will perform any lazy update prior to parallel execution. |

| disabling | Automatically disables the EM if user-specified nodes are present in the EG. This evaluator is used for troubleshooting purposes. It allows Maya to keep working stably until issues with problem nodes can be addressed. |

| hik | Handles the evaluation of HumanIK characters in an efficient way by recognizing HumanIK common connection patterns. |

| cycle | Unrolls cycle clusters to augment the opportunity for parallelism and improve performance. Likely gives the best performance improvements when large cycle clusters are present in the scene. Prototype, work in progress. |

| transformFlattening | Consolidates deep transform hierarchies containing animated parents and static children, leading to faster evaluation. Consolidation takes a snapshot of the relative parent/child transformations, allowing concurrent evaluation of downstream nodes. |

| pruneRoots | We found that scenes with several thousand paramCurves become bogged down because of scheduling overhead from resulting EG nodes and lose any potential gain from increased parallelism. To handle this situation, special clusters are created to group paramCurves into a small number of evaluation tasks, thus reducing overhead. |

Custom evaluator names are subject to change as we introduce new evaluators and expand these functionalities.

Sometimes, multiple evaluators will want to “claim responsibility” for the same node(s). This can result in conflict, and negatively impact performance. To avoid these conflicts, upon registration each evaluator is associated with a priority; nodes are assigned to the evaluator with the highest priority. Internal evaluators have been ordered to prioritize correctness and stability over speed.

Several API extensions and tools have been added to help you make the most of the EM in your pipeline. This section reviews API extensions for Parallel Evaluation, Custom GPU Deformers, Custom Evaluator API, VP2 Integration and Profiling Plug-ins.

If your plug-in plays by the DG rules, you will not need many changes to make the plug-in work in Parallel mode. Porting your plug-in so that it works in Parallel may be as simple as recompiling it against the latest version of OpenMaya!

If the EM generates different results than DG-based evaluation, make sure that your plug-in:

- Overrides MPxNode::compute(). This is especially true of classes extending MPxTransform which previously relied on asMatrix(). See the rockingTransform SDK sample. For classes deriving from MPxDeformerNode and MPxGeometryFilter, override the deform() method.

- Handles requests for evaluation at all levels of the plug tree. While the DG can request plug values at any level, the EM always requests the root plug. For example, for plug N.gp[0].p[1] your compute() method must handle requests for evaluation of N.gp, N.gp[0], N.gp[0].p, and N.gp[0].p[1].
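To make the plug tree levels concrete, the following sketch enumerates every level for a given plug path. It is plain string processing rather than Maya API code, and the function name `plug_levels` is hypothetical.

```python
import re


def plug_levels(plug_path):
    """List every level of the plug tree from the root plug to the given plug."""
    node, _, attribute_path = plug_path.partition(".")
    levels = []
    current = node
    for part in attribute_path.split("."):
        match = re.match(r"^([^\[\]]+)((?:\[\d+\])*)$", part)
        if match is None:
            raise ValueError("unexpected plug component: %r" % part)
        name, indices = match.groups()
        # The attribute itself (for example, N.gp) is one level...
        current = "%s.%s" % (current, name)
        levels.append(current)
        # ...and each array element (for example, N.gp[0]) is another.
        for index in re.findall(r"\[\d+\]", indices):
            current += index
            levels.append(current)
    return levels


print(plug_levels("N.gp[0].p[1]"))
# ['N.gp', 'N.gp[0]', 'N.gp[0].p', 'N.gp[0].p[1]']
```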

If your plug-in relies on custom dependency management, you need to use new API extensions to ensure correct results. As described earlier, the EG is built using the legacy dirty-propagation mechanism. Therefore, optimizations used to limit dirty propagation during DG evaluation, such as those found in MPxNode::setDependentsDirty, may introduce errors in the EG. Use MEvaluationManager::graphConstructionActive() to detect if this is occurring.

There are new virtual methods you will want to consider implementing:

MPxNode::preEvaluation. To avoid performing expensive calculations each time the evaluation method MPxNode::compute() is called, one strategy plug-in authors use is to store results from previous evaluations and then rely on MPxNode::setDependentsDirty to trigger re-computation. As discussed previously, once the EG has been built, dirty propagation is disabled and the EG is re-used. Therefore, any custom logic in your plug-in that depends on setDependentsDirty no longer applies.

MPxNode::preEvaluation allows your plug-in to determine which plugs/attributes are dirty and if any action is needed. Use the new MEvaluationNode class to determine what has been dirtied.

Refer to the simpleEvaluationNode devkit example for an illustration of how to use MPxNode::preEvaluation.

MPxNode::postEvaluation. Until now, it was difficult to determine at which point all processing for a node instance was complete. Users sometimes resorted to complex bookkeeping/callback schemes to detect this situation and perform additional work, such as custom rendering. This mechanism was cumbersome and error-prone.

Once all computations have been performed on a specific node instance, a new method, MPxNode::postEvaluation, is called. Since this method is called from a worker thread, it performs calculations for downstream graph operations without blocking other Maya processing tasks of non-dependent nodes.

See the simpleEvaluationDraw devkit example to understand how to use this method. If you run this example in regular evaluation, Maya slows down, since evaluation is blocked whenever expensive calculations are performed. When you run in Parallel Evaluation Mode, a worker thread calls the postEvaluation method and prepares data for subsequent drawing operations. When testing, you will see higher frame rates in Parallel evaluation versus regular or Serial evaluation. Please note that code in postEvaluation should be thread-safe.
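The caching pattern that preEvaluation supports can be illustrated without any Maya classes. The following is a plain-Python analogy, not plug-in code: the class `CachingNode` and its method names are hypothetical stand-ins for a node, MPxNode::preEvaluation, and MPxNode::compute(). A real plug-in would ask MEvaluationNode which plugs are dirty instead of receiving a set of names.

```python
import math


class CachingNode(object):
    """Stand-in for a node that caches an expensive result."""

    def __init__(self):
        self.inputs = {"radius": 1.0}
        self._cached_area = None
        self.compute_count = 0

    def pre_evaluation(self, dirty_attributes):
        # Invalidate the cache here, not in a setDependentsDirty-style hook,
        # because dirty propagation is disabled once the EG has been built.
        if "radius" in dirty_attributes:
            self._cached_area = None

    def compute(self):
        # Recompute only when the cache was invalidated.
        if self._cached_area is None:
            self.compute_count += 1
            self._cached_area = math.pi * self.inputs["radius"] ** 2
        return self._cached_area
```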

Other recommended best practices include:

Avoid storing state in static variables. Store node state/settings in attributes. This has the additional benefit of automatically saving/restoring the plug-in state when Maya files are written/read.

Node computation should not have any dependencies beyond input values. Maya nodes should be like functions. Output values should be computed from input state and node-specific internal logic. Your node should never walk the graph or try to circumvent the DG.

A new method was added in Maya 2022 to provide more control over which attributes get automatically computed by the Evaluation Manager.

By default, the Evaluation Manager evaluates all attributes that are touched by dirty propagation during the construction of the Evaluation Graph, that is, all attributes that are affected by time or are animated. However, some attributes might not actually be needed by the Viewport. For example, a particle shape node might be able to compute a mesh representation of the particle cloud which might not always be needed. However, if this attribute is dependent on animated input, it is always computed by default.

The MEvaluationNode::skipEvaluation() method provides a way to control this behavior by requesting skipping the computation of an attribute. When this request succeeds, the Evaluation Manager simply skips the evaluation of this attribute and leaves it dirty, so it can be properly evaluated if pulled on later.

The request is not guaranteed to succeed, as the safety of skipping the evaluation of a given attribute depends on how it is connected and used. The Evaluation Manager makes sure no concurrent pull evaluation can happen on the same node by preparing everything required for downstream nodes. MEvaluationNode::skipEvaluation() relaxes this constraint. Note that this request is always rejected for attributes with two or more downstream dependencies, as they could be pulling concurrently on the skipped attribute, potentially resulting in a race condition.

This method must be called during MPxNode::preEvaluation and there are two ways to use it:

allowSingleDownstreamDependency=false: This is the safest way, as it only allows skipping evaluation of an attribute if there are no downstream connections to this attribute. As soon as there is a single downstream dependency for this attribute, the request for skipping is ignored, because it is assumed that the downstream node(s) will eventually pull on the attribute, and other computations might be happening at the same time in the node. Note that this is the safe option to choose if the node can mark more than one attribute for skipping, as leaving multiple attributes unevaluated on the same node could result in concurrent pull evaluation, even if each attribute only has a single downstream dependency.

allowSingleDownstreamDependency=true: This way allows skipping an attribute even if there is one (and not more) downstream dependency. It is presumably safe if only one other node can pull on the attribute. Therefore, even if the Evaluation Manager does not evaluate it, pull evaluation from a single downstream node can be performed safely. Note that this is only true if the attribute is the only one skipped in the node, which is why allowing single downstream dependency is usually only set to true when there is a single skippable attribute in the node.

The state of whether or not the attribute is actually marked to be skipped can be queried using MEvaluationNode::skippingEvaluation().
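The acceptance rule described above can be summarized as a small decision function. The following is a sketch in plain Python covering only the downstream-dependency rule stated in this section; the function name `skip_request_accepted` is hypothetical, and the Evaluation Manager may reject a request for other reasons.

```python
def skip_request_accepted(downstream_dependencies, allow_single_downstream_dependency):
    """Model whether a skipEvaluation request on one attribute can succeed."""
    if downstream_dependencies >= 2:
        # Two or more consumers could pull concurrently: always rejected.
        return False
    if downstream_dependencies == 1:
        # A single consumer is tolerated only when explicitly allowed.
        return allow_single_downstream_dependency
    # No downstream connection: safe in both modes.
    return True
```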

To make GPU Override work on scenes containing custom deformers, Maya provides new API classes that allow the creation of fast OpenCL deformer back-ends.

Though you still need to have a CPU implementation for the times when it is not possible to target deformations on the GPU (see GPU Override), you can augment this with an alternate deformer implementation inheriting from MPxGPUDeformer. This applies to your own nodes as well as to standard Maya nodes.

The GPU implementation will need to:

When you have done this, do not forget to load your plug-in at startup. Two working devkit examples (offsetNode and identityNode) have been provided to get you started.

Tip. To get a sense for the maximum speed increase you can expect by providing a GPU backend for a specific deformer, tell Maya to treat specific nodes as passthrough. Here’s an example applied to polySoftEdge:

cmds.GPUBuiltInDeformerControl( name="polySoftEdge", inputAttribute="inputPolymesh", outputAttribute="output", passthrough=True )

Although results will be incorrect, this test will confirm if it is worth investing time implementing an OpenCL version of your node.

Fan-In refers to multiple GPU deformation chains “fanning in” to a single deformer node. A deformer that supports fan-in evaluation is one that can take the results of two or more GPU deformation chains as input. For example, a blendshape deformer can have both animated input geometry and animated input targets, each with their own upstream deformation chains.

Fan-In evaluation support for custom deformers is available through the API. In order to access upstream deformation data, there are two things your custom deformer must do:

The devkit example plugin basicMorphNode has been provided to demonstrate fan-in support.

API classes and methods introduced in Maya 2017 let you define custom evaluators that allow control over how the Maya scene is computed.

To create a custom evaluator, you must define a plug-in that extends the MPxCustomEvaluator class. The key class methods to override are described below.

Before you can use the new evaluators, they must be registered:

MStatus registerEvaluator(
    // name of the evaluator
    const char * evaluatorName,
    // evaluator priority. Higher priority evaluators get 'first-dibs'
    unsigned int uniquePriority,
    // function pointer to method returning a new evaluator instance
    MCreatorFunction creatorFunction
)

and deregistered:

MStatus deregisterEvaluator(
    // name of the evaluator
    const char* evaluatorName
)

using MFnPlugin methods. These functions should be used during plug-in initialization:

MStatus initializePlugin( MObject obj )
{
    MFnPlugin plugin( obj, PLUGIN_COMPANY, "3.0", "Any");
    MStatus status = plugin.registerEvaluator(
        "SimpleEvaluator",
        40,
        simpleEvaluator::creator);
    if (!status)
        status.perror("registerEvaluator");
    return status;
}

and uninitialization:

MStatus uninitializePlugin( MObject obj )
{
    MFnPlugin plugin( obj );
    MStatus status = plugin.deregisterEvaluator( "SimpleEvaluator" );
    if (!status)
        status.perror("deregisterEvaluator");
    return status;
}

as illustrated above.

Once the plug-in has been loaded, use Python or MEL commands to enable:

import maya.cmds as cmds
cmds.evaluator(enable=True, name='SimpleEvaluator')
# Result: False #

disable:

cmds.evaluator(enable=False, name='SimpleEvaluator')
# Result: True #

and query information about evaluators:

print(cmds.evaluator(query=True))
[u'invisibility', ... u'SimpleEvaluator']

NOTE: The evaluator command returns the previous state of the evaluator (as described in the documentation). This command fails if the evaluator cannot be enabled.

To view the priorities of all loaded evaluators, use the priority flag on the evaluator command:

for evaluatorName in cmds.evaluator():
    print("%-25s : %d" % (
        evaluatorName,
        cmds.evaluator(name=evaluatorName, query=True, priority=True)))

invisibility : 1003000

frozen : 1002000

curveManager : 1001000

cache : 1000000

timeEditorCurveEvaluator : 104000

dynamics : 103000

ikSystem : 102000

disabling : 100000

hik : 7000

reference : 6000

deformer : 5000

cycle : 4000

transformFlattening : 3000

pruneRoots : 1000

SimpleEvaluator : 50

This section provides more detail on different MPxCustomEvaluator API methods.

During EG partitioning, each evaluator gets to claim evaluation nodes, using the:

bool MPxCustomEvaluator::markIfSupported(const MEvaluationNode* node)

method. You can safely cause evaluation in this call, but doing so increases partitioning and evaluation time. The developer can decide whether evaluation is required (call .inputValue / .inputArrayValue), or the previously-evaluated datablock values can be re-used (call .outputValue / .outputArrayValue). If multiple evaluators mark a specific node, priority determines which evaluator is assigned the node at run-time. For example, if two evaluators, A and B, both mark node C, and evaluator A has priority 100 while evaluator B has priority 10, then during graph partitioning evaluator A gets the opportunity to grab node C before evaluator B. Evaluators should not try to grab a node already grabbed by a higher-priority evaluator.
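The priority rule can be modeled in a few lines. The following is a sketch in plain Python of the outcome of partitioning, not of how Maya implements it; the function name `assign_nodes` and the data layout are hypothetical.

```python
def assign_nodes(claims, priorities):
    """Give each node to the highest-priority evaluator that marked it.

    claims: evaluator name -> set of nodes it marked as supported.
    priorities: evaluator name -> unique integer priority.
    """
    owner = {}
    ranked = sorted(claims, key=lambda name: priorities[name], reverse=True)
    for evaluator in ranked:
        for node in claims[evaluator]:
            # A node already grabbed by a higher-priority evaluator stays there.
            owner.setdefault(node, evaluator)
    return owner


claims = {"A": {"C"}, "B": {"C", "D"}}
priorities = {"A": 100, "B": 10}
print(assign_nodes(claims, priorities) == {"C": "A", "D": "B"})  # True
```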

To determine if an evaluator can evaluate clusters in Parallel, use:

MCustomEvaluatorClusterNode::SchedulingType schedulingType(
    // a disjoint set of nodes on a custom evaluator layer
    const MCustomEvaluatorClusterNode * cluster
)

where:

| SchedulingType | Details |

|---|---|

| kParallel | any number of nodes of the same type can run in parallel |

| kSerial | all nodes of this type should be chained and executed sequentially |

| kGloballySerial | only one node of this type can be run at a time |

| kUntrusted | nothing else can execute with this node since we cannot predict what will happen |

During EG scheduling:

bool MPxCustomEvaluator::clusterInitialize(
    // evaluation cluster node
    const MCustomEvaluatorClusterNode* cluster
)

can be used to perform the required cluster preparation. The pointer to the cluster remains valid until graph invalidation, such as when the scene topology changes.

Before the cluster is deleted,

void MPxCustomEvaluator::clusterTerminate(
    // the cluster to terminate
    const MCustomEvaluatorClusterNode* cluster
)

is called to allow needed cleanup, for example, releasing evaluator-specific resources. It is up to the custom evaluator to decide if it wants to clear its internal representation.

There are 3 main methods used during execution.

Prior to graph execution, the EM calls:

void MPxCustomEvaluator::preEvaluate(
    // the graph about to be evaluated
    const MEvaluationGraph* graph
)

During execution, the EM calls:

void MPxCustomEvaluator::clusterEvaluate(
    // the cluster to be evaluated
    const MCustomEvaluatorClusterNode* cluster
)

You will only receive clusters that belong to this evaluator. This call always happens after clusterInitialize and never after clusterTerminate. Finally,

void MPxCustomEvaluator::postEvaluate(
    // the graph that was evaluated
    const MEvaluationGraph* graph
)

is called just after a graph evaluation is finished.

Now that we have reviewed relevant API methods, the following example limits evaluation by caching previous results. simpleEvaluator assumes the existence of scene nodes that tag controller nodes with animation and works as follows:

In clusterInitialize, we build a list of translation and rotation attribute plugs.

    // Build a list of plugs by scanning the scene for controller nodes.
    // This gets called during scheduling.
    bool simpleEvaluator::clusterInitialize(
        const MCustomEvaluatorClusterNode* cluster
    )
    {
        if (fControllerPlugs.length() == 0)
            buildPlugListWithControllerTag();
        return true;
    }

    // Scan the scene for any controller nodes, populating the plug list.
    // Called during the scheduling phase.
    void simpleEvaluator::buildPlugListWithControllerTag()
    {
        MStatus stat;
        MItDependencyNodes dgIter(MFn::kControllerTag, &stat);
        if (stat != MS::kSuccess)
            return;

        // Attributes whose plugs are collected from each controller.
        const char* values[] = {
            "translateX",
            "translateY",
            "translateZ",
            "rotateX",
            "rotateY",
            "rotateZ"
        };

        for (; !dgIter.isDone(); dgIter.next())
        {
            MFnDependencyNode controllerTagNode(dgIter.thisNode(), &stat);
            if (stat != MS::kSuccess)
                continue;

            MPlug currControllerTagPlug =
                controllerTagNode.findPlug("controllerObject", &stat);
            if (stat != MS::kSuccess)
                continue;

            // found controller tag node, now get its source controller
            MPlugArray source;
            bool retval = currControllerTagPlug.connectedTo(
                source,
                true /* asDst */,
                false /* asSrc */,
                &stat);
            if ((retval == false) || (stat != MS::kSuccess))
                continue;

            // there should only be one source with the controller tag node
            // as destination
            MObject controllerNode = source[0].node(&stat);
            if (stat != MS::kSuccess)
                continue;

            MFnDependencyNode currControllerNode(controllerNode, &stat);
            if (stat != MS::kSuccess)
                continue;

            for (unsigned int j = 0; j < 6; j++)
            {
                MPlug currPlug = currControllerNode.findPlug(values[j], &stat);
                if (stat == MS::kSuccess)
                    fControllerPlugs.append(currPlug);
                else
                    std::cerr
                        << "NO PLUG: "
                        << currControllerNode.name().asChar()
                        << "."
                        << values[j]
                        << std::endl;
            }
        }
    }

Later, during preEvaluate, which is called per-frame, a hash value is calculated based on the plug values of the current frame.

    void simpleEvaluator::preEvaluate(const MEvaluationGraph* graph)
    {
        buildHashValue();
    }

    void simpleEvaluator::buildHashValue()
    {
        unsigned int length = fControllerPlugs.length();
        MStatus stat = MS::kSuccess;

        for (unsigned int i = 0; i < length; i++)
        {
            float value = 0;
            stat = fControllerPlugs[i].getValue(value);

            if (stat == MS::kSuccess)
            {
                boost::hash_combine(fCurrentHashValue, value);
            }
            else
            {
                std::cerr
                    << "NO VALUE: "
                    << fControllerPlugs[i].name().asChar()
                    << std::endl;
            }
        }
    }

This value is compared with the previous frame’s hash in clusterEvaluate. If the hash is different, evaluation proceeds; otherwise, we do nothing.

    void simpleEvaluator::clusterEvaluate(
        const MCustomEvaluatorClusterNode* cluster
    )
    {
        if (fOldHashValue != fCurrentHashValue)
            cluster->evaluate();
    }

To make sure the hash value is up-to-date, the hash value is stored in postEvaluate.

    void simpleEvaluator::postEvaluate(const MEvaluationGraph* graph)
    {
        fOldHashValue = fCurrentHashValue;
        fCurrentHashValue = 0;
    }

Finally, when the graph topology becomes invalid, clusterTerminate is called, and we use it to clear the cached list of plugs.

    void simpleEvaluator::clusterTerminate(
        const MCustomEvaluatorClusterNode* cluster
    )
    {
        if (fControllerPlugs.length() > 0)
            fControllerPlugs.clear();
    }

Since simpleEvaluator claims control over the entire graph, markIfSupported returns true for all nodes. Additionally, nothing special is done to alter the cluster’s scheduling behavior.

    bool simpleEvaluator::markIfSupported(const MEvaluationNode* node)
    {
        return true;
    }

    MCustomEvaluatorClusterNode::SchedulingType
    simpleEvaluator::schedulingType(const MCustomEvaluatorClusterNode* cluster)
    {
        return cluster->schedulingType();
    }

See the provided simpleEvaluator devkit example for more details and complete source code.
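The hash-and-compare pattern used by simpleEvaluator can also be tried outside Maya. The following is a minimal sketch under stated assumptions: HashGate and hashCombine are hypothetical names, plain float values stand in for controller plug values, and the mixing step uses std::hash with the commonly used hash_combine recipe instead of Boost. The fHasOld flag, which forces evaluation on the first frame, is an addition of this sketch and is not taken from the devkit example.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Mix one value into a running seed. This follows the widely used
// hash_combine recipe but relies only on std::hash.
inline void hashCombine(std::size_t& seed, float value)
{
    seed ^= std::hash<float>{}(value) + 0x9e3779b9 + (seed << 6) + (seed >> 2);
}

// Hypothetical cache that mimics the preEvaluate / clusterEvaluate /
// postEvaluate sequence described above.
class HashGate {
public:
    // preEvaluate step: hash the input values of the current frame.
    void preEvaluate(const std::vector<float>& inputs)
    {
        fCurrent = 0;
        for (float v : inputs)
            hashCombine(fCurrent, v);
    }

    // clusterEvaluate step: evaluation is needed on the first frame or
    // whenever the hash differs from the one stored for the previous frame.
    bool needsEvaluation() const { return !fHasOld || fOld != fCurrent; }

    // postEvaluate step: remember this frame's hash for the next comparison.
    void postEvaluate()
    {
        fOld = fCurrent;
        fHasOld = true;
    }

private:
    std::size_t fCurrent = 0;
    std::size_t fOld = 0;
    bool fHasOld = false;
};
```

Calling preEvaluate, needsEvaluation, and postEvaluate once per frame reproduces the flow above: identical inputs on consecutive frames skip evaluation, while a changed input triggers it. Because this is a hash comparison, two different input sets could in principle collide, so the approach trades a small risk of a missed update for speed.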

New methods were added to MPxCustomEvaluator in Maya 2022 to control at runtime (i.e. without repartitioning) execution of parts of the Evaluation Graph.

These methods provide the API to prune the execution of the nodes claimed by a custom evaluator. If the execution of a node is pruned, its downstream nodes are pruned automatically, since the data they depend on will not have been computed. If an object is pruned, VP2 will not display the object. If the plug-in wants the pruned objects to show up in VP2 for some reason, it has to define the environment variable MAYA_RENDERING_IF_PRUNED.

There are two specific methods for pruning execution:

- MPxCustomEvaluator::wantPruneExecution()
- MPxCustomEvaluator::pruneExecution(const MCustomEvaluatorClusterNode* cluster)

The pruning process involves several phases:

Phase 1: Claiming clusters: The claiming process in the partitioning phase (MPxCustomEvaluator::markIfSupported()) should include all the candidate nodes that might be pruned. A candidate is not guaranteed to be pruned, because that decision is made on the fly at every evaluation. The claiming process can claim all the nodes to be pruned, but it is more efficient to claim only the most upstream nodes, for example anim curve nodes, and let the evaluation manager prune their downstream nodes.

Phase 2: Enabling the pruning process: The pruning process is off by default and can be turned on through MPxCustomEvaluator::wantPruneExecution(). This method is called every time a cluster is created for this evaluator during partitioning. If it is not overridden or returns false, no runtime pruning check is performed, so the claimed nodes always execute.

Phase 3: Pruning process: If the pruning process is enabled, MPxCustomEvaluator::pruneExecution() is called at each evaluation for each cluster created by the custom evaluator, to tell the evaluation manager whether the given cluster should be pruned. MPxCustomEvaluator::pruneExecution() is not called if MPxCustomEvaluator::wantPruneExecution() returns false or is not overridden. If a cluster node is not pruned, the custom evaluator is responsible for managing its evaluation.

We provide a sample using the execution pruning API described above. It uses the concept of a PruneSet as criteria to determine whether or not to prune execution. This is just an example of the custom logic that could be used to prune execution.

See the provided evaluationPruningEvaluator devkit example for more details and complete source code.
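To make the downstream effect of pruning concrete, here is a small sketch that is independent of the Maya API. It assumes a hypothetical graph representation in which nodes are numbered and downstream[i] lists the nodes fed by node i; propagatePruning is an illustrative name, not a Maya function. It only demonstrates the rule stated above, that every node downstream of a pruned node is pruned as well, and says nothing about how the evaluation manager implements this.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <vector>

// Hypothetical graph: downstream[i] lists the nodes fed by node i.
// Returns one flag per node: true if the node is skipped this evaluation.
std::vector<bool> propagatePruning(
    const std::vector<std::vector<std::size_t>>& downstream,
    const std::vector<std::size_t>& prunedRoots)
{
    std::vector<bool> pruned(downstream.size(), false);
    std::queue<std::size_t> pending;

    // Start from the nodes the evaluator decided to prune.
    for (std::size_t root : prunedRoots) {
        assert(root < downstream.size());
        if (!pruned[root]) {
            pruned[root] = true;
            pending.push(root);
        }
    }

    // Breadth-first walk: anything fed by a pruned node is pruned too.
    while (!pending.empty()) {
        std::size_t node = pending.front();
        pending.pop();
        for (std::size_t next : downstream[node]) {
            assert(next < downstream.size());
            if (!pruned[next]) {
                pruned[next] = true;
                pending.push(next);
            }
        }
    }
    return pruned;
}
```

For a graph with two independent chains, 0 → 1 → 2 and 3 → 4, pruning node 0 marks nodes 0, 1, and 2 as skipped and leaves nodes 3 and 4 untouched. This is why claiming only the most upstream nodes is sufficient in Phase 1.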

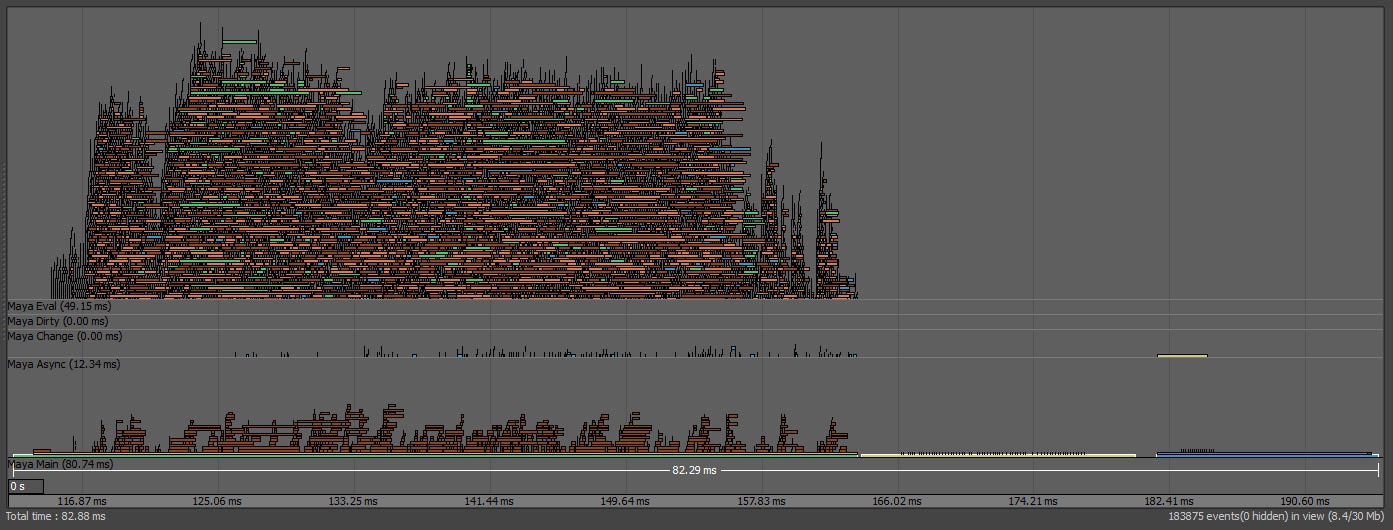

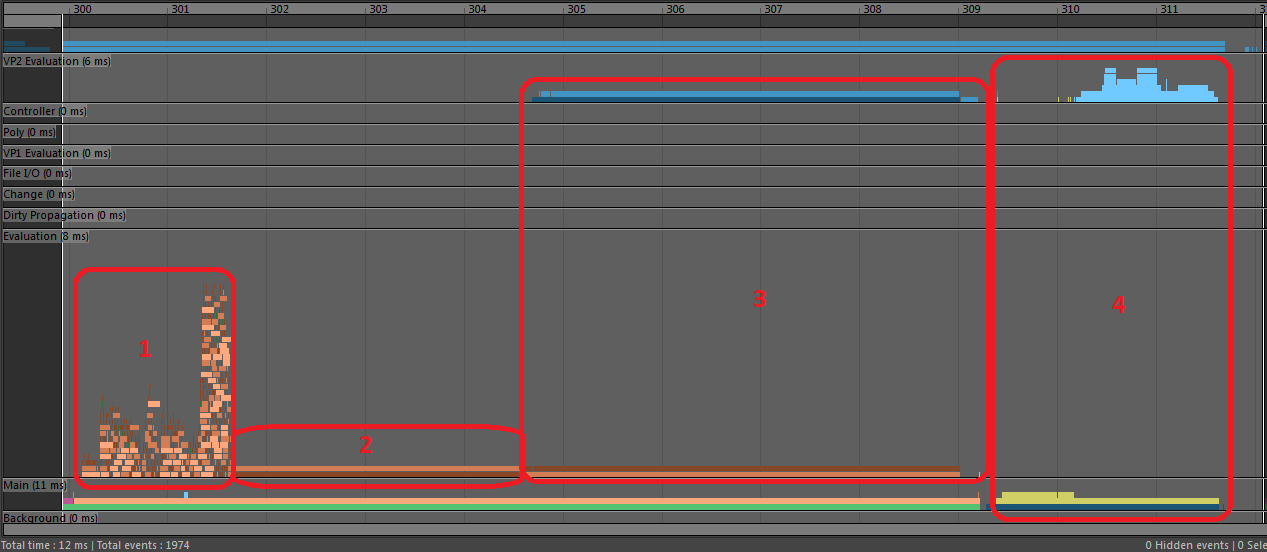

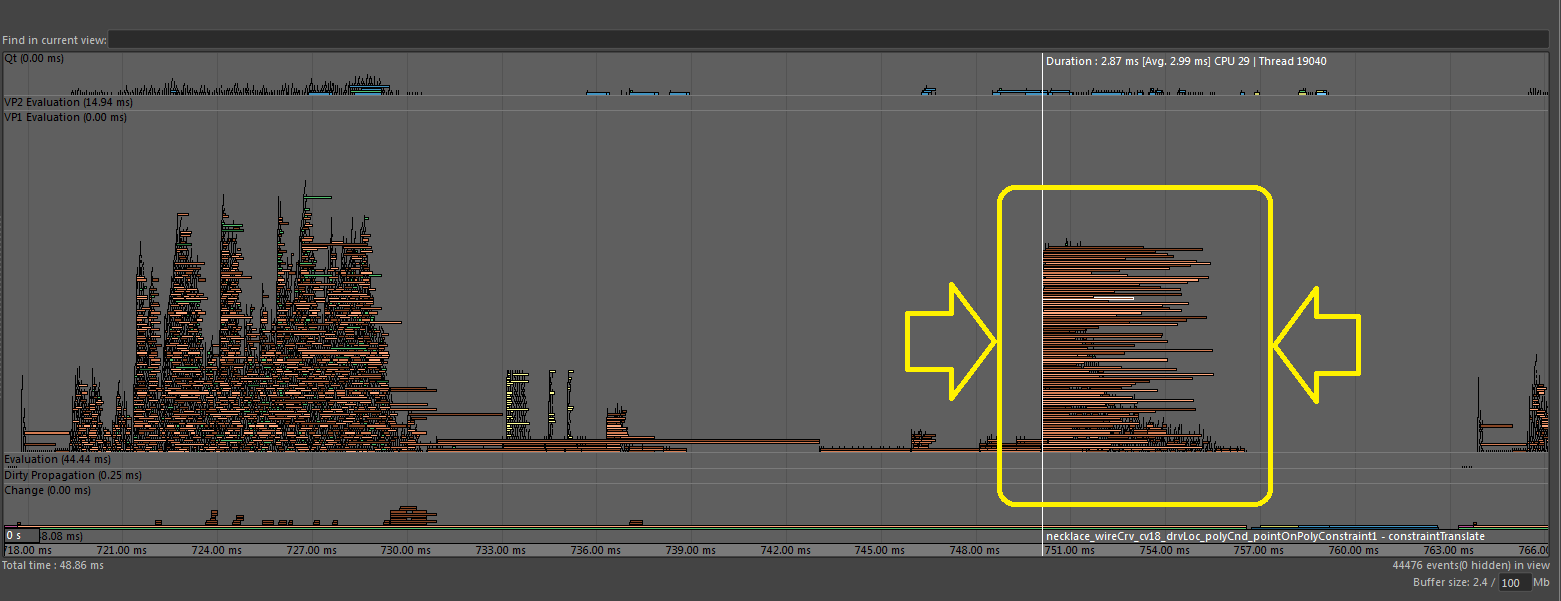

Evaluation Manager Parallel Evaluation executes the Dependency Graph in parallel. Internally, Maya nodes begin preparing render data for VP2 immediately after a node is evaluated, and before the rest of the graph has finished evaluation. This is exposed to users as Evaluation Manager Parallel Update in the MPxGeometryOverride API (this feature may also be referred to as “Direct Update”). Supporting Evaluation Manager Parallel Update can significantly reduce time spent in Vp2BuildRenderLists and improve overall scene performance.

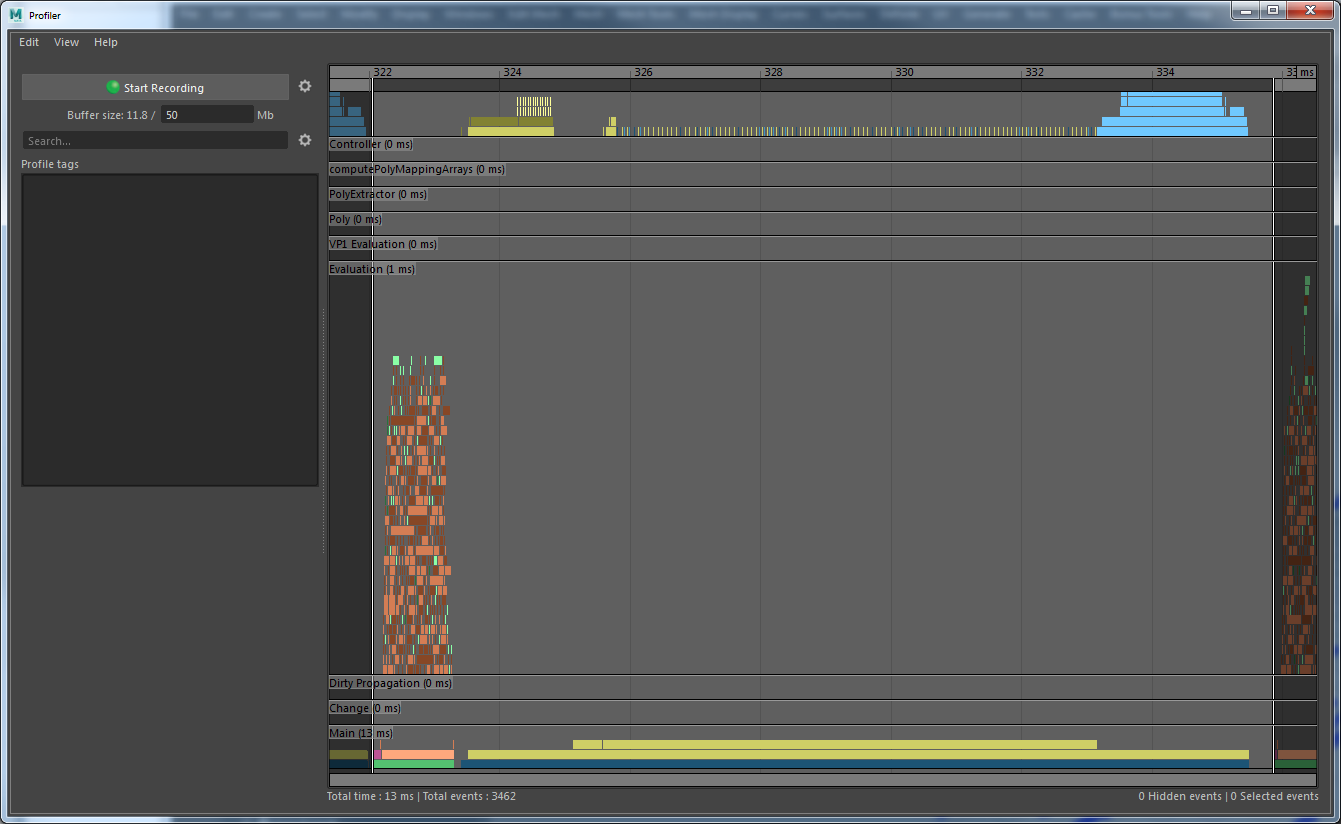

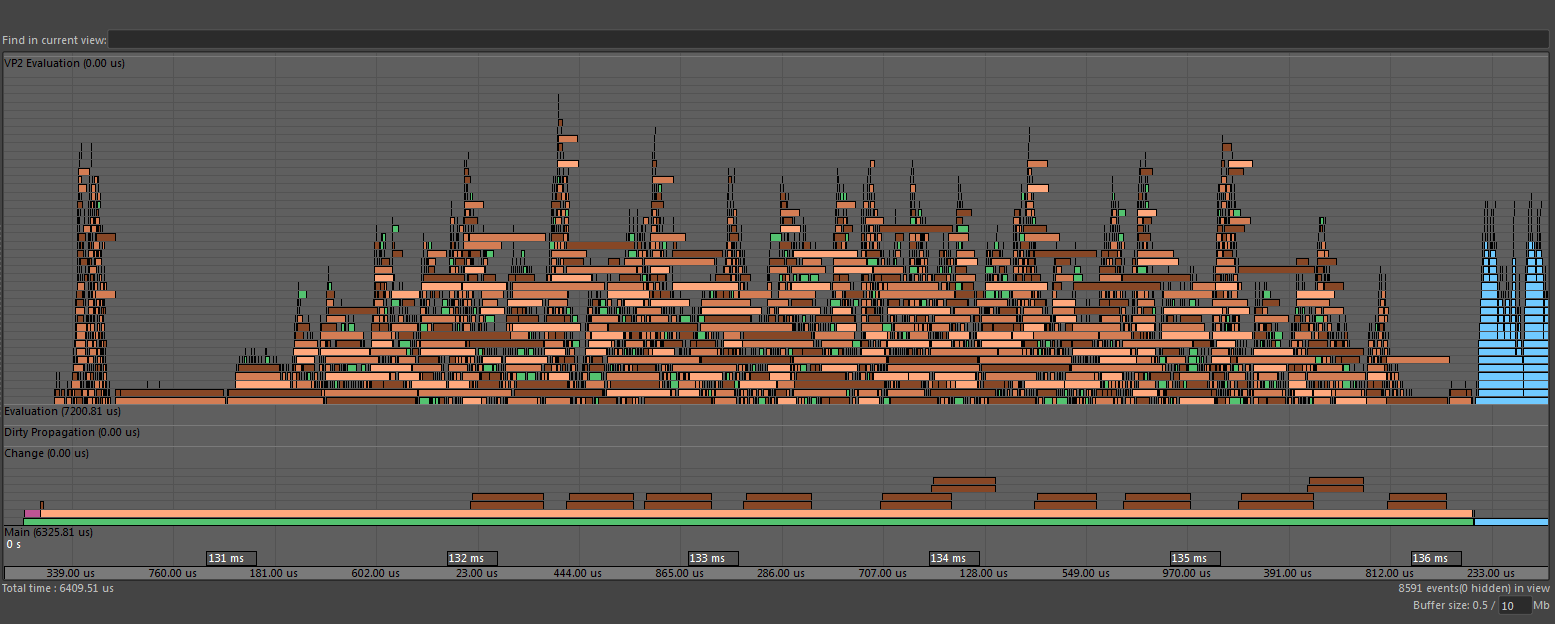

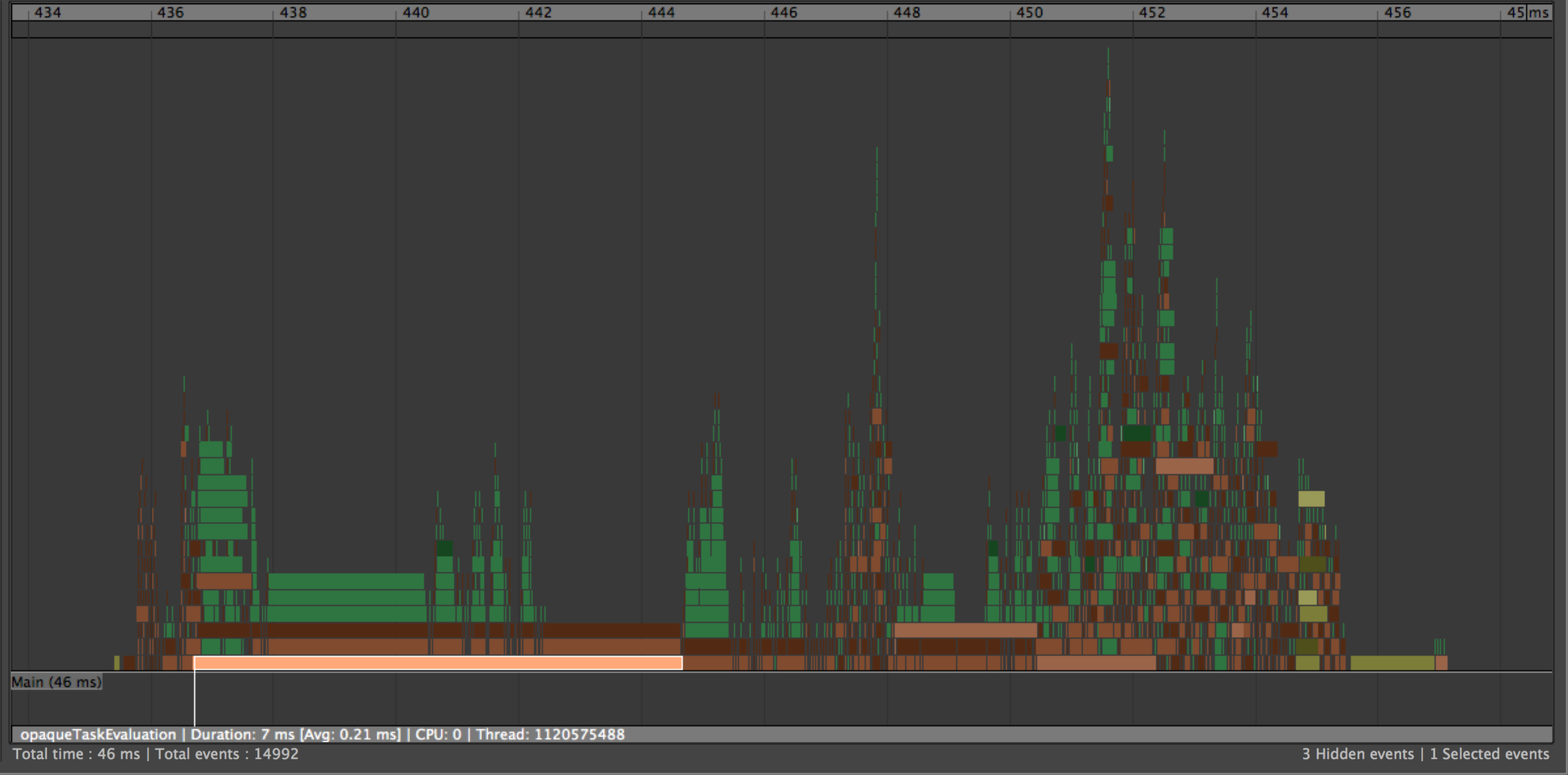

The following profiler images were created from the same scene (100 footPrintNode_GeometryOverride nodes with animated “size” attributes). In the first image Evaluation Manager Parallel Update is not enabled, and a large amount of time is spent serially preparing draw data for each footPrint node in Vp2BuildRenderLists.

In the second image the footPrintNode_GeometryOverride has been modified to support Evaluation Manager Parallel Update. You can see that the long serial execution time in Vp2BuildRenderLists has been eliminated. All the data marshalling for VP2 is occurring in parallel while the Evaluation Manager is evaluating the Dependency Graph.

The footPrintNode_GeometryOverride example plug-in shows in detail how to create an efficient MPxGeometryOverride plug-in that supports Evaluation Manager Parallel Update and performs well in VP2.

Supporting Evaluation Manager Direct Update adds some restrictions on which operations can safely be performed from MPxGeometryOverride function calls. All MPxGeometryOverride functions (except cleanUp() and the destructor) may be called from a worker thread in parallel with other Maya execution, so these methods must all be thread safe. An MPxGeometryOverride object is guaranteed to have at most one of its member functions called at a time. However, if two different MPxGeometryOverride objects “A” and “B” both require updating, any member function on “A” could be called at the same time as any member function on “B”.

Furthermore, because these methods may be called from a worker thread, direct access to the rendering context is prohibited. MVertexBuffer and MIndexBuffer can still be used, but some of their features are prohibited from use when in Evaluation Manager Parallel Update. Details about which features are safe to use are provided in the documentation for MVertexBuffer and MIndexBuffer.

Evaluation Manager Parallel Update currently has the limitation that it can only be used on geometries that do not have animated topology. The status of whether topology is animated or not needs to be tracked from the geometry’s origin to its display shape.

If the nodes in the graph are built-in nodes, Maya can know if an animated input will affect the output geometry topology. Similarly, deformers (even custom ones derived from MPxDeformerNode), are assumed to simply deform their input in their output, keeping the same topology.

However, more generic nodes can also generate geometries. When a custom node is a MPxNode, Maya cannot know whether an output geometry has animated topology. It therefore assumes the worst and treats the topology as animated. While this approach is the safest, it can prevent optimizations such as Evaluation Manager Parallel Update.

As of Maya 2019, a new API has been added to inform Maya about attributes that might not affect the topology of an output geometry.

Using this new API helps Maya to know that it is safe to use Evaluation Manager Parallel Update and benefit from its performance boost in more situations.

To visualize how long custom plug-ins take in the new profiling tools (see Profiling Your Scene) you will need to instrument your code. Maya provides C++, Python, and MEL interfaces for you to do this. Refer to the Profiling using MEL or Python or the API technical docs for more details.

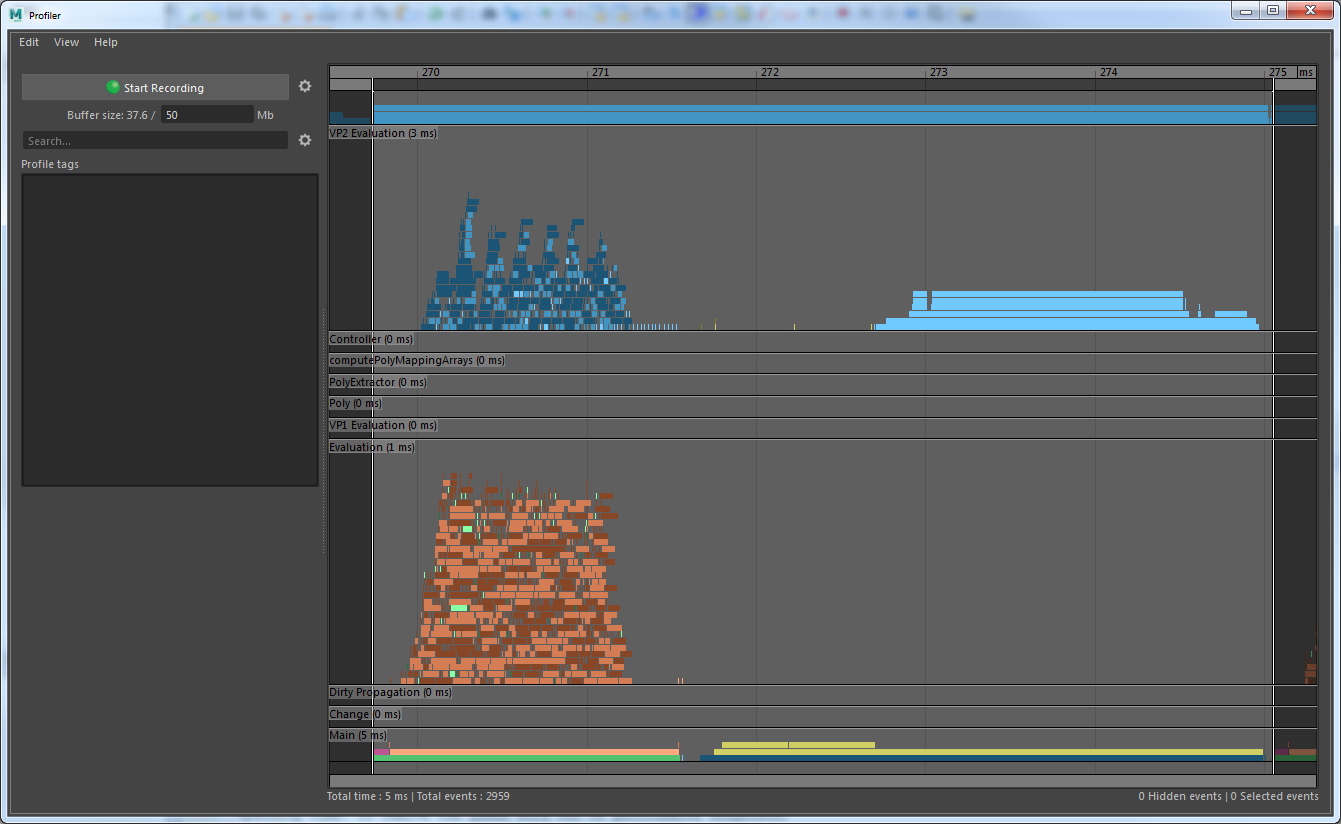

In the past, it could be challenging to understand where Maya was spending time. To take the guesswork out of performance diagnosis, Maya includes a new integrated profiler that lets you see exactly how long different tasks are taking.

Open the Profiler by selecting:

Once the Profiler window is visible:

Tip. By default, the Profiler allocates a 20 MB buffer to store results. The record buffer can be expanded in the UI or by using the

    profiler -b value;

command, where value is the desired size in MB. You may need this for more complex scenes.

The Profiler includes information for all instrumented code, including playback, manipulation, authoring tasks, and UI/Qt events. When profiling your scene, make sure to capture several frames of data to ensure gathered results are representative of scene bottlenecks.

The Profiler supports several views depending on the task you wish to perform. The default Category View, shown below, classifies events by type (for example, dirty, VP1, VP2, and Evaluation). The Thread and CPU views show how function chains are subdivided amongst available compute resources. Currently, the Profiler does not support visualization of GPU-based activity.

Now that you have a general sense of what the Profiler tool does, let’s discuss key phases involved in computing results for your scene and how these are displayed. By understanding why scenes are slow, you can target scene optimizations.

Every time Maya updates a frame, it must compute and draw the elements in your scene. Hence, computation can be split into two main categories:

When the main bottleneck in your scene is evaluation, we say the scene is evaluation-bound. When the main bottleneck in your scene is rendering, we say the scene is render-bound.

Each event recorded by the profiler has an associated color. Each color represents a different type of event. By understanding event colors you can quickly interpret profiler results. Some colors are re-used and so have different meanings in different categories.

We can’t see every different type of event in a single profile, because some events like Dirty Propagation only occur with Evaluation Manager off, and other events like GPU Override CPU usage only occur with Evaluation Manager on. In the following example profiles we will show DG Evaluation, Evaluation Manager Parallel Evaluation, GPU Override Evaluation, Evaluation Cached Evaluation and VP2 Cached Evaluation.

Through these examples we’ll see how to interpret a profile based on graph colors and categories, and we’ll learn how each performance optimization in Maya can impact a scene’s performance. The following example profiles are all generated from the same simple FK character playing back.

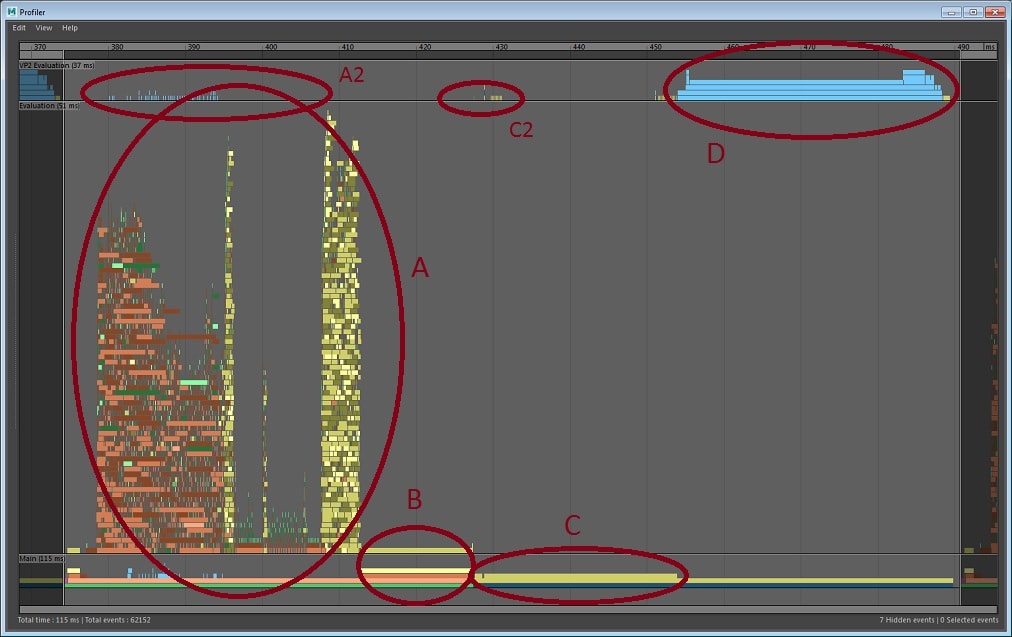

In this profile of DG Evaluation we can see several types of event.

A significant fraction of each frame is spent on Dirty Propagation, a problem which is alleviated by Evaluation Manager.